This is an exploration in two parts, inspired by this article by Julio Merino. In it, he expands on this tweet, which shows a 600 MHz system from the turn of the millennium opening apps significantly faster than modern machines with SSDs and 400 GB/s of memory bandwidth.

He goes into detail on why he thinks this happens, and one of his reasons is that companies prioritize developer time. As someone who builds and delivers slow software, I'd like to offer two other reasons, partly as a way to ask whether they hold up.

The first reason is that building fast functionality is easy, but delivering it to every potential user, across all the different ways humans live and work, is difficult. This encounter with the world at large makes our software accumulate patch after patch of duct tape.

Software isn't alone here. Look at a well-used machine shop and you'll notice that every machine, except for the new ones, is marked up, dinged, shimmed and adjusted, sometimes beyond recognition. Each shim, each marking, each wrong screw in the right hole was added to handle an edge case that the designers didn't anticipate, or that didn't make sense for them to accommodate.

This is why most software comes to life fast and elegant, and stays that way only if it fails. Success usually involves a mad scramble to handle edge cases, even when you're lucky enough to live in a walled garden (like the Selfish Giant, one of my dad's favorite stories). Even if you have the time, which you never do, adjusting for these edge cases requires shims and duct tape, which form scar tissue that resists being unpacked.

More importantly for us users, it means that things get slower. This is especially true if you were already on a supported path, because your path through the system now accumulates conditionals that serve someone else in the best case, and perhaps no one in the worst case.

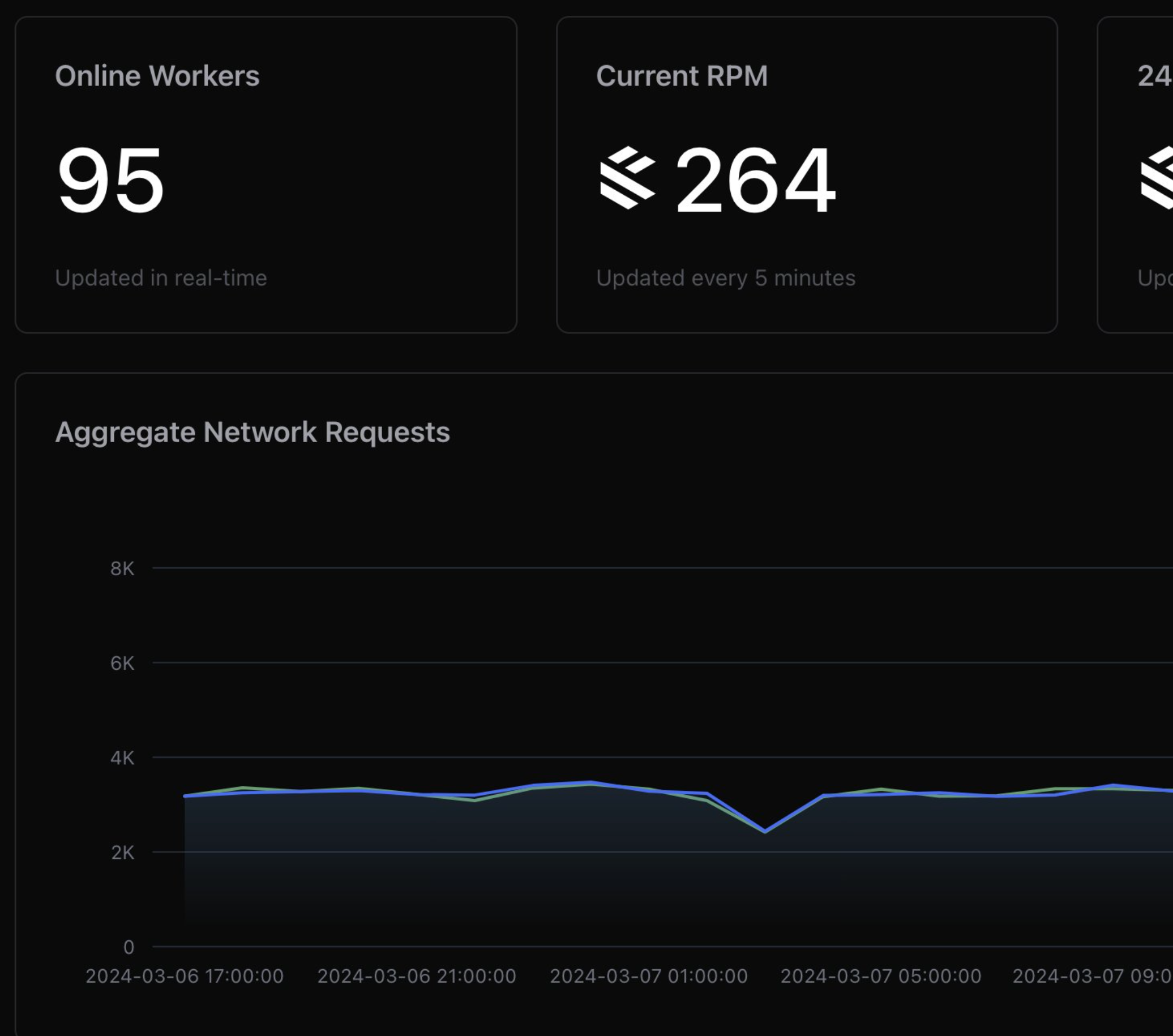

It's hard to understand the magnitude of this process - often, software gets slower faster than our computers get quicker.

§Let me illustrate

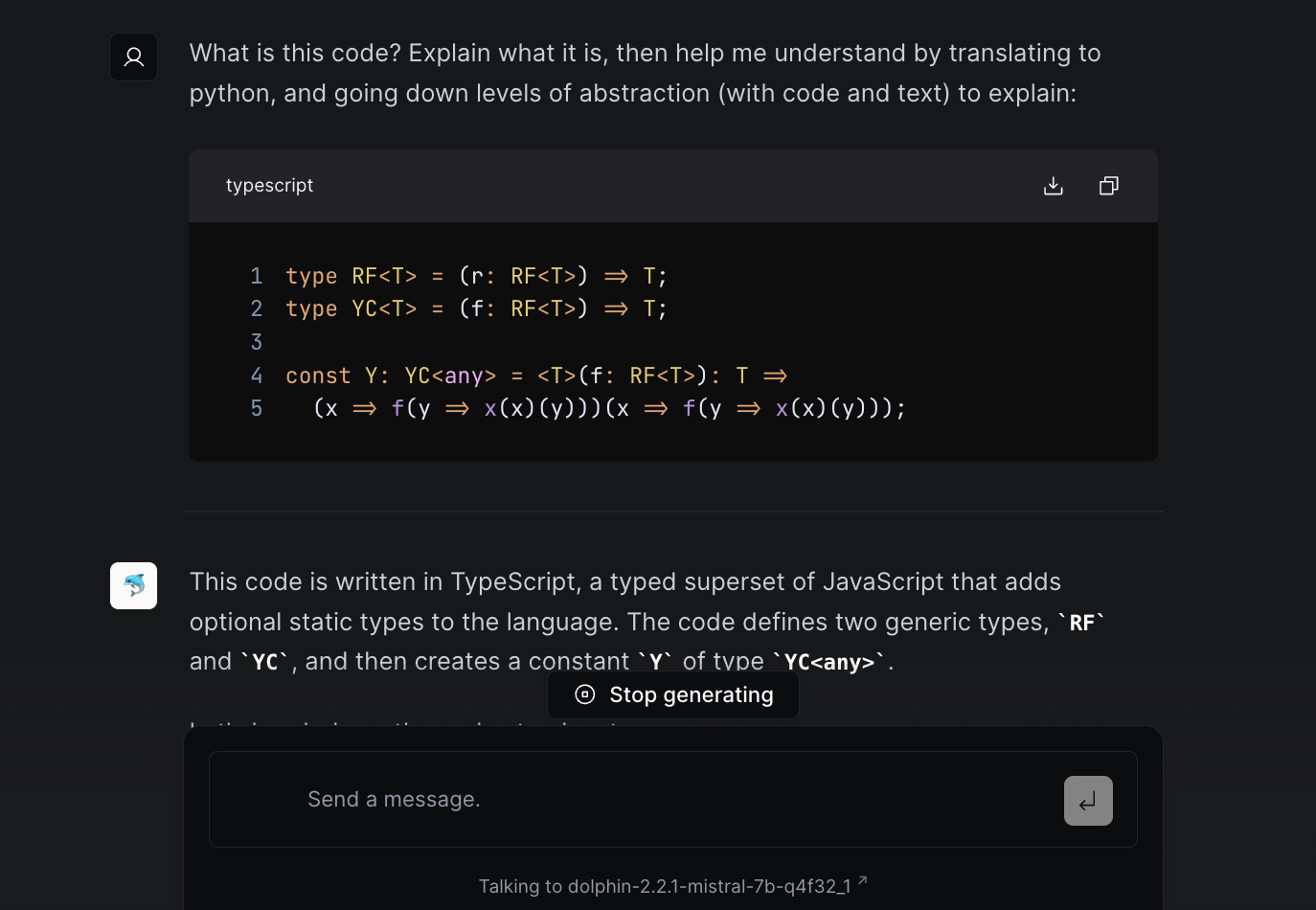

I can provide two concrete examples here. First, in the codebases I maintain, looking only at the code we've written, the complexity (especially at startup) comes from handling edge cases. These include state left over from older versions, validation routines that keep corrupted data from getting through, polyfills and shims that support more platforms, accessibility layers that support more types of users, and general business-logic patchwork that supports more customer workflows.

Second, a good chunk of our dependencies are big for the same reason. They just have to support a different, overlapping set of users and protocols than we do.

Neither of these affects newer, younger systems. In fact, we could rewrite our dependencies ourselves and get significantly faster code, but we would likely be doomed to add the same duct tape they have, except ours would be written in our users' blood (as the FAA likes to say).
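To make the "conditionals meant to serve someone else" point concrete, here is a minimal Python sketch of a hypothetical startup path. The shim names, state fields and version thresholds are all made up for illustration and don't come from any real codebase. Each shim pairs a check with a fix. The check runs for every user, but the fix only runs for the users who actually hit that edge case.

```python
from typing import Callable

# Hypothetical startup shims, each added after launch for someone's edge case.
# A shim is (name, applies_to, fix): applies_to runs for EVERY user at startup,
# fix runs only for users who actually hit that edge case.
Shim = tuple[str, Callable[[dict], bool], Callable[[dict], None]]

SHIMS: list[Shim] = [
    # State saved by an older version needs migrating to the current schema.
    ("legacy-state", lambda s: s.get("schema", 0) < 4, lambda s: s.update(schema=4)),
    # Corrupted preferences get replaced before anything else reads them.
    ("corrupt-prefs", lambda s: not isinstance(s.get("prefs"), dict), lambda s: s.update(prefs={})),
    # Older platforms get a polyfill.
    ("old-os-polyfill", lambda s: s.get("os_version", 99) < 10, lambda s: s.update(polyfill=True)),
    # Users with a screen reader get an accessibility layer.
    ("screen-reader", lambda s: s.get("a11y", False), lambda s: s.update(a11y_layer=True)),
]


def start(state: dict) -> tuple[int, int]:
    """Run every startup shim; return (checks evaluated, fixes applied)."""
    checked = applied = 0
    for _name, applies_to, fix in SHIMS:
        checked += 1
        if applies_to(state):
            fix(state)
            applied += 1
    return checked, applied


# A user already on the supported path still pays for every check.
print(start({"schema": 4, "prefs": {}, "os_version": 14}))  # (4, 0)
# A user with old, damaged state on an old OS hits three of the four shims.
print(start({"schema": 1, "prefs": None, "os_version": 8}))  # (4, 3)
```

In a real app, each `applies_to` is rarely a cheap dictionary lookup. It's more likely a file read, a platform query or a network call. That cost is what the supported-path user pays for everyone else's edge cases.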

§Other, fixable reasons

This is the major, unfixable reason that I can see. There are indeed problems just as large that are solvable (or partly so), but they exist at a systemic level.

The first is that our package ecosystems encourage code reuse without giving us interfaces that let us be selective about what comes along with it. Often you'll import something for a banana, but you don't just get a banana: you get the monkey holding it, the tree it's sitting in, and the forest that tree came with.

The second is that most of our software, for better or worse, is online today. Users have gotten used to automatic cloud saves, closing one machine and picking up on another, and seamless updates at startup. All of this means that startup has become an incredibly complicated process that has to cope with network partitions and mismatches between state and logic at every level. Sometimes simply delaying functionality is the cheapest way to let these things settle and resolve, unless you want to use a SAT solver to find every race condition that might exist.
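Here is a minimal Python `asyncio` sketch of what "simply delaying functionality" can look like. The sync call, the `playlist_version` field and the timeouts are hypothetical stand-ins, not any real app's API. The UI comes up from local state immediately. Editing stays off until the remote state has arrived and been reconciled, and it stays off entirely if the sync doesn't finish in time.

```python
import asyncio


async def fetch_remote_state(delay: float) -> dict:
    """Hypothetical cloud-sync call; `delay` stands in for network latency."""
    await asyncio.sleep(delay)
    return {"playlist_version": 7}


async def start_app(local: dict, network_delay: float, sync_timeout: float) -> list[str]:
    # Show the UI from local state right away.
    log = [f"ui ready (playlist_version={local['playlist_version']})"]
    # Editing stays disabled until the sync settles, instead of reasoning
    # about every way an early edit could race with the incoming state.
    try:
        remote = await asyncio.wait_for(fetch_remote_state(network_delay), sync_timeout)
    except asyncio.TimeoutError:
        log.append("sync timed out; editing stays disabled")
        return log
    if remote["playlist_version"] != local["playlist_version"]:
        local.update(remote)
        log.append(f"reconciled to playlist_version={local['playlist_version']}")
    log.append("editing enabled")
    return log


# Fast network: the app reconciles, then enables editing.
print(asyncio.run(start_app({"playlist_version": 5}, network_delay=0.01, sync_timeout=1.0)))
# Slow network: the UI is still up, but editing is held back.
print(asyncio.run(start_app({"playlist_version": 5}, network_delay=0.5, sync_timeout=0.05)))
```

The trade-off is deliberate. Users wait longer for some features, but the code never has to handle an edit made against state that's about to be overwritten.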

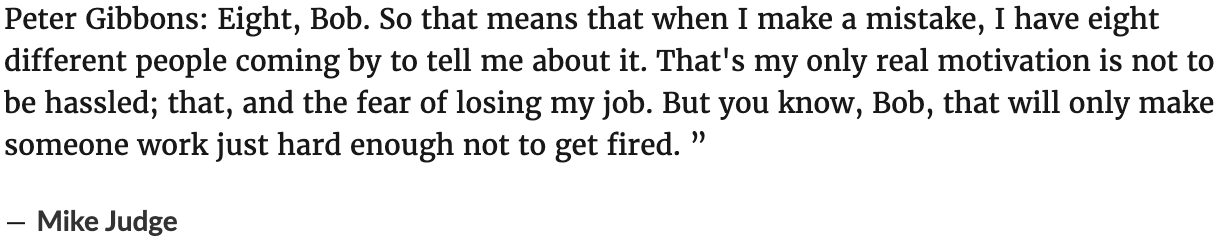

The third is perhaps another way of looking at companies prioritizing developer time: shipping speed. Most software (like Spotify, the example used in the article) moves fast, again for better or for worse, so the ability of developers to work in those codebases and make improvements is prioritized over the speed of the app itself. Users have shown time and time again, even in our industry, that they prefer new features delivered quickly over app speed. Of course, this only goes so far. Much like PIPs for developers, users complaining that an app is slow will get us to work on it, but only until it's just fast enough.

That's the first reason - how the past of a large system contributes to how slow it becomes. Next time we'll talk about how carrying the weight of the future of a system also makes it slower.
