Language models like GPT-4 have been getting smaller and faster while also becoming smarter. So small, in fact, that you can run a pretty smart model like Mistral or Llama 2 entirely in the browser. This has huge implications for privacy, cloud costs and customization. Imagine running multiple finetunes of a model, each specialized to your product, entirely inside your application. Today's 7B-parameter models (which, quantized to q4, are about 4 GB in size and small enough to cache persistently in the browser) are a great fit for most NLP tasks, conversational assistance and writing.
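As a rough sanity check on the 4 GB figure, here is a back-of-the-envelope calculation. It assumes exactly 7 billion weights, and the 4.5 bits-per-weight case is an assumed allowance for quantization scales and other overhead; the real number depends on the model and the quantization scheme.

```python
def quantized_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumed round numbers, for illustration only.
print(quantized_size_gb(7e9, 16.0))  # unquantized float16 weights
print(quantized_size_gb(7e9, 4.0))   # raw 4-bit weights
print(quantized_size_gb(7e9, 4.5))   # 4-bit weights plus assumed overhead
```

The gap between the float16 and 4-bit figures is what makes caching the model in the browser practical.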

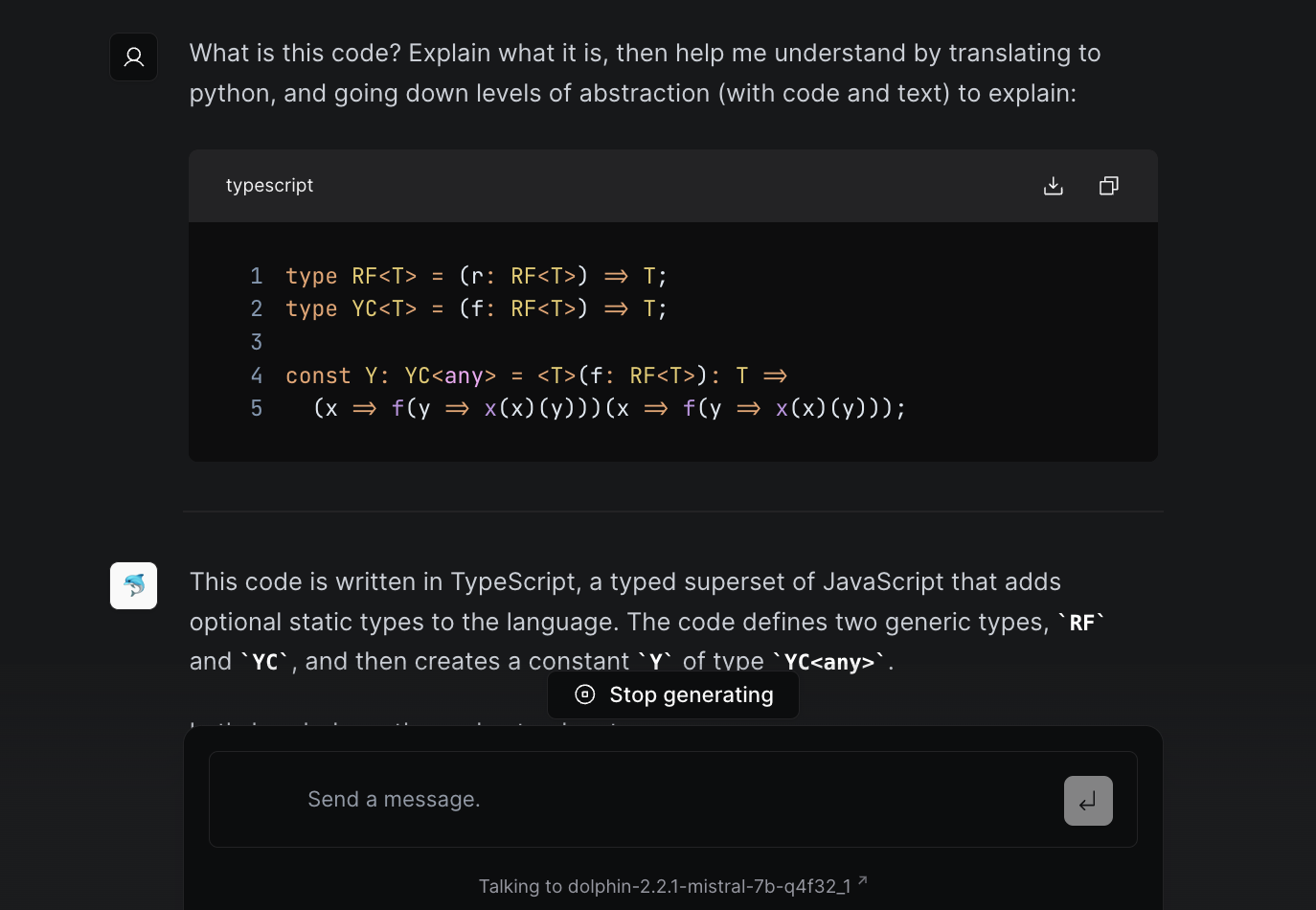

Here's a small demo of the dolphin-2.2.1 model compiled using these steps: WASM-AI. This repo has a starter template to run and distribute models you compile here.

§How to compile

Create a new anaconda environment. Python 3.10 is preferred:

    conda create -n mlc-310 python=3.10
    conda activate mlc-310

Install the appropriate version of TVM following the guide here. In our case, we'll install for CUDA 11.8, but if you're on Apple M1 or another platform, you can adjust the package based on the instructions.

    python3 -m pip install --pre -U -f https://mlc.ai/wheels mlc-ai-nightly

For safety, let's make sure we have torch and other necessities (guide here):

    conda install pip pytorch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 pytorch-cuda=11.8 -c pytorch -c nvidia

If you're on an NVIDIA GPU, we want to make sure nvcc is on our PATH. If it's not, we need to add it:

    export PATH="/usr/local/cuda-11.8/bin:$PATH"
    export LD_LIBRARY_PATH="/usr/local/cuda-11.8/lib64:$LD_LIBRARY_PATH"

Grab the mlc-llm repo from here:

    git clone https://github.com/mlc-ai/mlc-llm.git
    cd mlc-llm

For Mistral models, for some reason, trying to compile with the latest release caused problem after problem. This is the last commit we know to work, as suggested by the insanely helpful felladrin, with more details here.

    git checkout 1e6fb11658356b1c394871872d480eef9f2c9197

Now we can init the submodules.

    git submodule init
    git submodule update --recursive

We now need to install EMSDK so we can compile to wasm.

Make sure to add it to your PATH, so that running `emcc --version` gives valid output. The guide above should instruct you on how.

Set `TVM_HOME`:

    export TVM_HOME=/path/to/mlc-llm/3rdparty/tvm

Run the test commands here to validate that you're all good!
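In addition to the linked test commands, a small preflight script can catch the most common setup mistakes from the steps so far. This is a minimal sketch, not part of mlc-llm: the function name `preflight` is hypothetical, and it only checks that `emcc` and `nvcc` are on the PATH and that `TVM_HOME` points to an existing directory.

```python
import os
import shutil


def preflight(tools=("emcc", "nvcc"), env_vars=("TVM_HOME",)):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    for tool in tools:
        # shutil.which mirrors what the shell does when resolving a command.
        if shutil.which(tool) is None:
            problems.append(f"{tool} not found on PATH")
    for name in env_vars:
        value = os.environ.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{name} points to a missing directory: {value}")
    return problems


if __name__ == "__main__":
    issues = preflight()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Environment looks OK")
```

If you're not on an NVIDIA GPU, call it as `preflight(tools=("emcc",))` to skip the `nvcc` check.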

Now, once again, we need to move the TVM Relax submodule forward to a working commit and rebuild its web runtime:

    cd 3rdparty/tvm
    git fetch
    git checkout 5828f1e9e
    git submodule init
    git submodule update --recursive
    make webclean
    make web
    cd ../..

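We now also need to fix a float16/float32 mismatch error by replacing every float16 with float32 in the rotary embeddings file. The `-i.bak` form (no space) works with both GNU and BSD/macOS sed and keeps a `.bak` backup of the original:

    sed -i.bak 's/float16/float32/g' mlc_llm/transform/fuse_split_rotary_embedding.py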
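If sed's in-place flag gives you trouble on your platform, the same substitution can be done in Python. This is a sketch under the assumption that a plain text replacement is all that's needed; `replace_in_file` is a hypothetical helper name, not something from mlc-llm.

```python
import shutil
from pathlib import Path


def replace_in_file(path, old, new, backup_suffix=".bak"):
    """Replace every occurrence of `old` with `new`, keeping a backup copy.

    Returns the number of occurrences replaced.
    """
    path = Path(path)
    text = path.read_text(encoding="utf-8")
    count = text.count(old)
    if count:
        # Keep the original next to the edited file, like sed's backup suffix.
        shutil.copy2(path, path.with_name(path.name + backup_suffix))
        path.write_text(text.replace(old, new), encoding="utf-8")
    return count


# Usage, run from the mlc-llm checkout:
# replace_in_file("mlc_llm/transform/fuse_split_rotary_embedding.py", "float16", "float32")
```

Unlike sed, this only writes a backup when something actually changed, so running it twice won't overwrite the backup with the already-patched file.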

If everything works, you're good to go. Let's try compiling dolphin-2.2.1:

    python3 -m mlc_llm.build --hf-path ehartford/dolphin-2.2.1-mistral-7b --target webgpu --quantization q4f32_1

Last bit: once you have the compiled wasm, you need to look inside the model folder (`/dist/dolphin-2.2.1-mistral-7b_q4f32_1/params` in our case) to find `mlc-chat-config.json`. Inside, you want to update the conversation config and template to:

    "conv_template": "custom",
    "conv_config": {
      "system": "<|im_start|>system: You are an AI assistant that follows instructions extremely well. Help as much as you can.",
      "roles": ["<|im_start|>user", "<|im_start|>assistant"],
      "seps": ["<|im_end|>\n"],
      "stop_str": "<|im_end|>"
    },
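If you recompile often, editing the config by hand gets tedious. The sketch below applies the same two keys programmatically and leaves every other key in the file alone. The helper name `patch_chat_config` is hypothetical, and the code assumes the file is a single JSON object.

```python
import json
from pathlib import Path

# The conversation settings from this guide (ChatML-style prompts for dolphin).
CHATML_OVERRIDES = {
    "conv_template": "custom",
    "conv_config": {
        "system": "<|im_start|>system: You are an AI assistant that follows "
                  "instructions extremely well. Help as much as you can.",
        "roles": ["<|im_start|>user", "<|im_start|>assistant"],
        "seps": ["<|im_end|>\n"],
        "stop_str": "<|im_end|>",
    },
}


def patch_chat_config(config_path, overrides=CHATML_OVERRIDES):
    """Merge `overrides` into the JSON config at `config_path` and save it."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config.update(overrides)  # replaces only the top-level keys listed above
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Usage (path from this guide, relative to the mlc-llm checkout):
# patch_chat_config("dist/dolphin-2.2.1-mistral-7b_q4f32_1/params/mlc-chat-config.json")
```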

§Running the model

Once you're done, you can upload your model to Hugging Face so that hosting is taken care of. If you're more technically inclined, or just testing, you can also host your model locally by running `npx http-server --cors="*"` in the model folder.
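If you'd rather not pull in Node for local testing, a static file server with a permissive CORS header can be sketched with Python's standard library. This is an assumed stand-in for the `http-server` command, not a tested replacement: it only adds `Access-Control-Allow-Origin: *`, and the names `CORSRequestHandler` and `make_server` are made up for this example.

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class CORSRequestHandler(SimpleHTTPRequestHandler):
    """Static file handler that allows requests from any origin."""

    def end_headers(self):
        # Added to every response, including errors and directory listings.
        self.send_header("Access-Control-Allow-Origin", "*")
        super().end_headers()


def make_server(directory=".", port=8080):
    """Build a local server that serves `directory` with CORS enabled."""
    handler = partial(CORSRequestHandler, directory=directory)
    return ThreadingHTTPServer(("127.0.0.1", port), handler)


# Usage, from the model folder:
# make_server(".", 8080).serve_forever()
```

The server binds to 127.0.0.1 only, which is enough for testing in a browser on the same machine.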

Once it's hosted somewhere, you need two things: the params folder and the wasm file that was just generated. In the example repo, you can edit this file with the model names to add your model's files, and restart the project. You should now be able to run your model in the browser!

This guide should stay reproducible for a while, since it pins known-working commits, but do check the web-llm and mlc-llm repositories to see if there's an easier path now. The team improves things all the time, so even though this guide will work, there might be better ways later. Keep an eye on this thread.
