RSI is something that scares the bejeezus out of me. As someone who works with their fingers, losing the ability to type - or even losing my current proficiency to talk to my system - would feel like losing the ability to walk. I have had multiple family members affected with RSI, and the anecdotal evidence of it showing up out of nowhere has led me down the path of mobility work, sparring and doing many, many preventative things in the hope of giving myself as much useful time with my hands as I can. I do occasionally get wrist pain that needs me to stop working, but thankfully nothing has stuck.

I also wonder what I would do if I did find myself on the other side. I've kept an eye on voice to code interfaces, but the learning curve has always looked steep, and full of memorised voice commands. For a field that changes quickly, this can be a problem.

With the introduction of ChatGPT and Whisper, it has been an exciting week to say the least. Whisper.cpp, llama.cpp and now alpaca.cpp have hinted at how good local tools are about to get, and drove me down a quick study of what we can do today in this regime.

§The test

I'll pick something that I can read aloud - as I would think of the code to myself - and we'll see how much gets through the system. With something as pedantically processed as source code, we should be able to compare line for line and see where we could do better.

Where do we start?

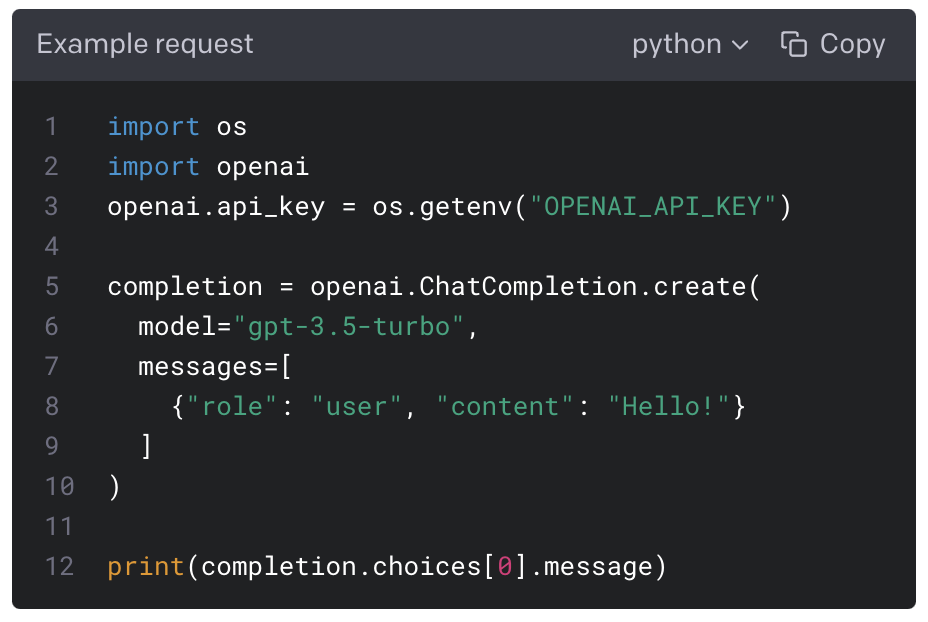

Let's try the OpenAI code snippet for python to connect to the Chat Completions API.

import os

import openai

openai.api_key = os.getenv("OPENAI_API_KEY")

completion = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "user", "content": "Hello!"}

]

)

print(completion.choices[0].message)

Here's the audio of me describing it. Let's convert it to the 16 khz wav using ffmpeg, and give it to the large whisper model:

ffmpeg -i ~/Downloads/Whisper.cpp.m4a -acodec pcm_s16le -ar 16000 ../testscripts/whisper.cpp.wav

./main -m models/ggml-large.bin -t 4 ../testscripts/code.wav --print-colors --output-txt

§Asking nicely

Did you know you could prompt Whisper? This is primarily being used for vocabulary injection, but we'll try using more detailed prompts to see if it can understand what we tell it - it should. First we'll try no prompts at all.

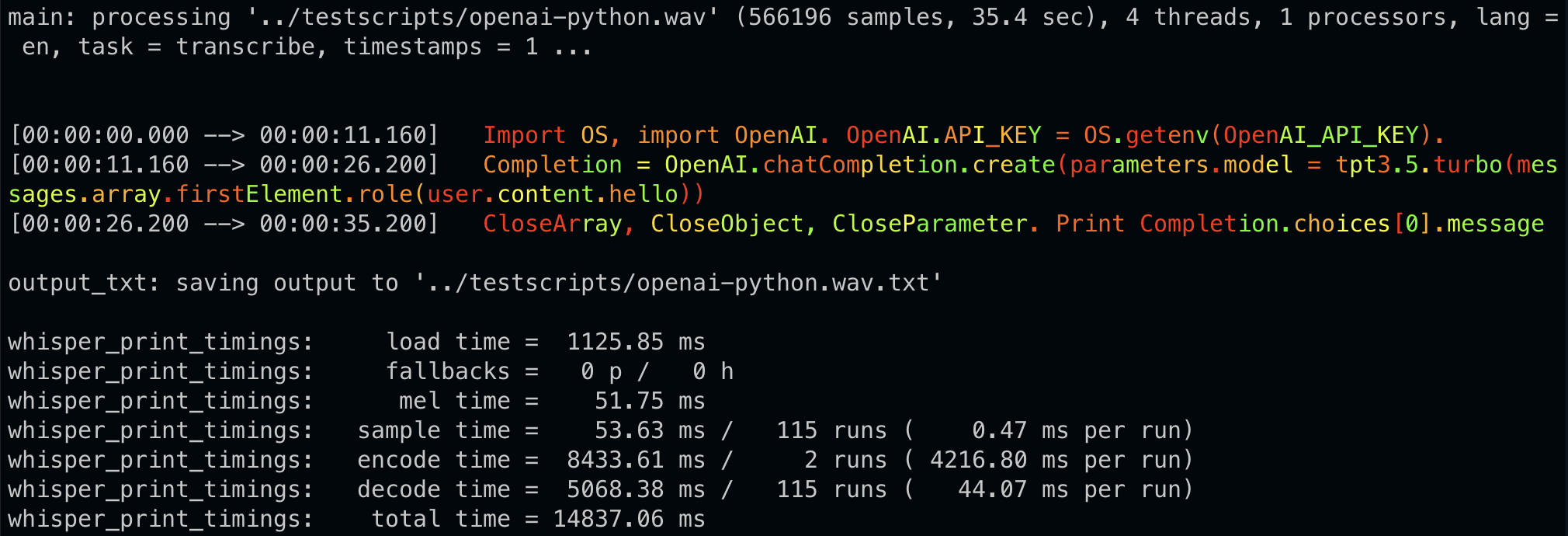

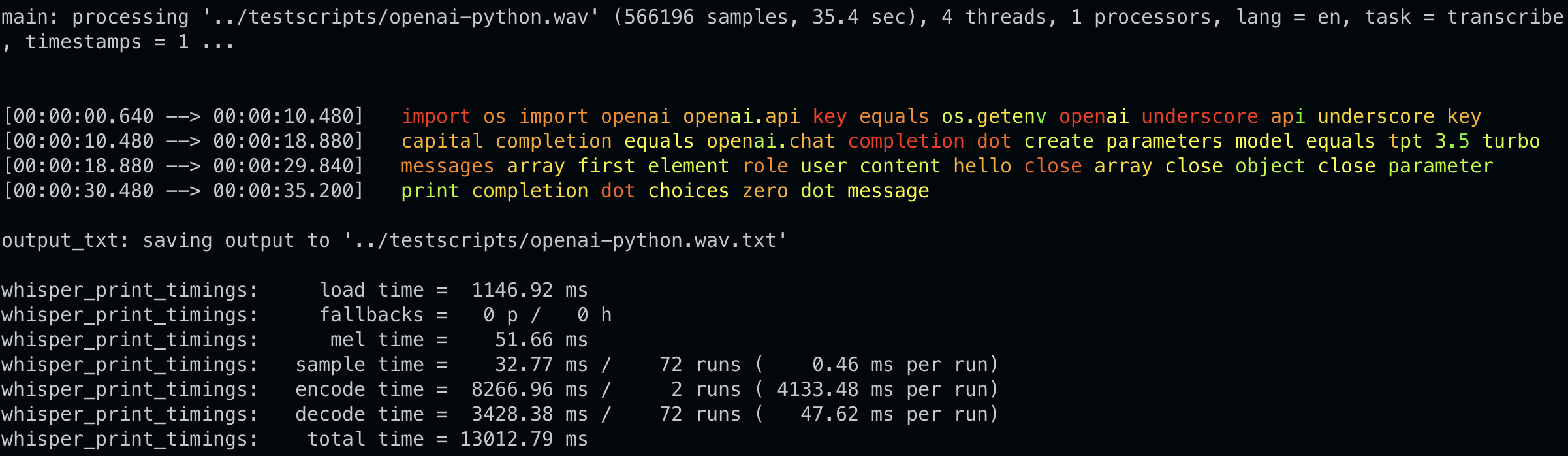

Prompt: None

We get a pretty garbled string back that would be pretty unusable as code.

Import OS, import OpenAI. OpenAI.API_KEY = OS.getenv(OpenAI_API_KEY).

Completion = OpenAI.chatCompletion.create(parameters.model = tpt3.5.turbo(messages.array.firstElement.role(user.content.hello))

CloseArray, CloseObject, CloseParameter. Print Completion.choices[0].message

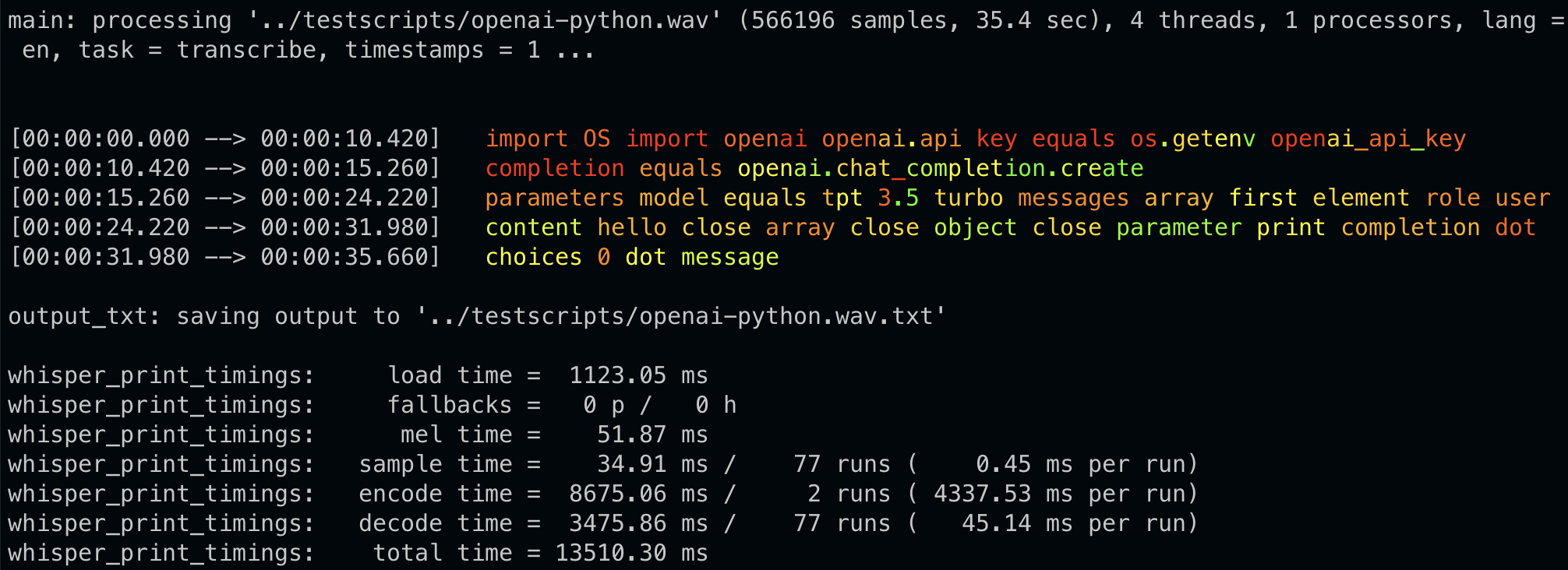

Let's try telling it what we're doing. The prompt can be Reading the openai python API out loud -:

import OS import openai openai.api key equals os.getenv openai_api_key

completion equals openai.chat_completion.create

parameters model equals tpt 3.5 turbo messages array first element role user

content hello close array close object close parameter print completion dot

choices 0 dot message

That did next to nothing, but I think some of the capitalization is gone. It might actually understand what we're saying.

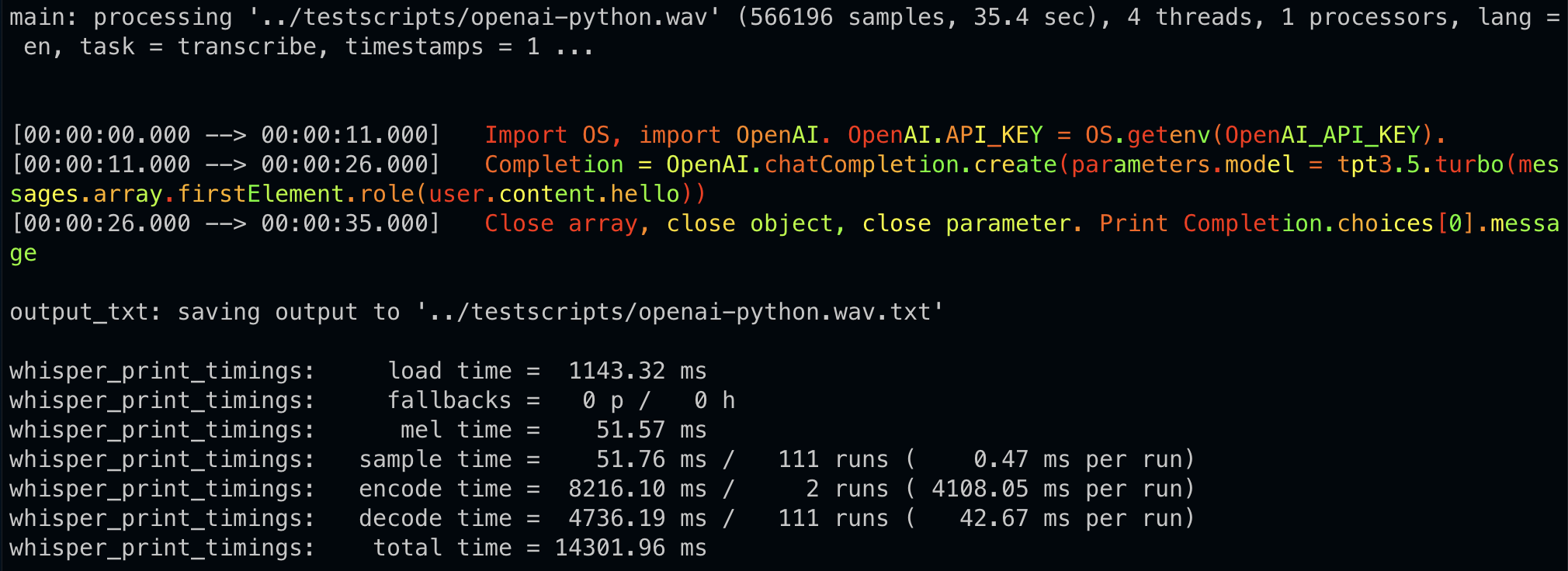

One big change we want to make is to get rid of the written special characters and turn them into the actual characters. The prompt is convert spoken characters into the characters themselves:

Import OS, import OpenAI. OpenAI.API_KEY = OS.getenv(OpenAI_API_KEY).

Completion = OpenAI.chatCompletion.create(parameters.model = tpt3.5.turbo(messages.array.firstElement.role(user.content.hello))

Close array, close object, close parameter. Print Completion.choices[0].message

Not there yet, but a hell of a lot better.

Let's try combining our prompts to see if that works better. The prompt is convert spoken characters into the characters themselves. Reading the openai python API out loud -:

import os import openai openai.api key equals os.getenv openai underscore api underscore key

capital completion equals openai.chat completion dot create parameters model equals tpt 3.5 turbo

messages array first element role user content hello close array close object close parameter

print completion dot choices zero dot message

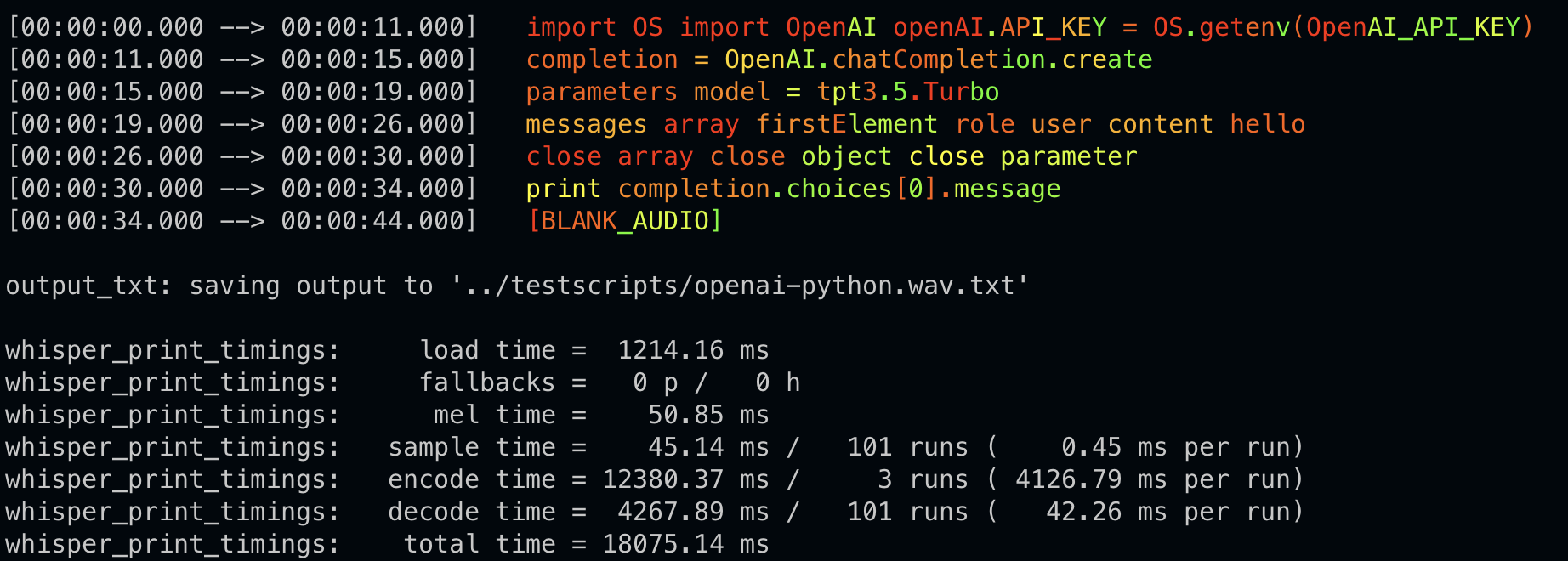

That was actually worse. We'll try one more thing before we move on. The prompt is ```python:

import OS import OpenAI openAI.API_KEY = OS.getenv(OpenAI_API_KEY)

completion = OpenAI.chatCompletion.create

parameters model = tpt3.5.Turbo

messages array firstElement role user content hello

close array close object close parameter

print completion.choices[0].message

Ever so slightly better, but we need help.

§A bazooka to a gunfight

Let's feed our last transcript into GPT-4 with the following prompt, and see if it can, with it's much bigger brain, figure out what we meant instead of what we said.

Prompt:

Here is a transcript of me reading some python code. Please convert it as accurately as you can, into only valid python code. "Import OS, import OpenAI. OpenAI.API_KEY = OS.getenv(OpenAI_API_KEY). Completion = OpenAI.chatCompletion.create(parameters.model = tpt3.5.turbo(messages.array.firstElement.role(user.content.hello)) CloseArray, CloseObject, CloseParameter. Print Completion.choices[0].message

Result:

import os

import openai

openai.api_key = os.getenv("OpenAI_API_KEY")

completion = openai.ChatCompletion.create(

model="text-davinci-002",

messages=[{"role": "user", "content": "hello"}]

)

print(completion.choices[0].message)

Works out of the box! But maybe it knew the answer somehow? I don't see why OpenAI would feed it its own docs, but it's not out of the question.

§Bigger problems

Let's try a more complicated piece of code.

Here's a piece of some python code I wrote over half a decade ago, describing a fire detection engine using cv2:

def fire_analysis_engine(folder,filename):

video = cv2.VideoCapture(folder+'/'+filename)

pointer = int((hist_samples/(temp_max-temp_min))*(threshold_temp-temp_min))

counter = 0

background = None

ret, frame = video.read()

areas = []

deltaXY = []

while(ret!=False):

posX = 0

posY = 0

if(counter==0):

counter+=1

background_mask = cv2.bitwise_not(cv2.inRange(frame, np.array([245,245,245]), np.array([255,255,255])))

continue

original = frame

frame = cv2.bitwise_and(frame,frame,mask=background_mask)

frame = frame[32:144,0:277]

#Start Processing

framehist = np.histogram(cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY).ravel(),bins=256,range=(0,256))

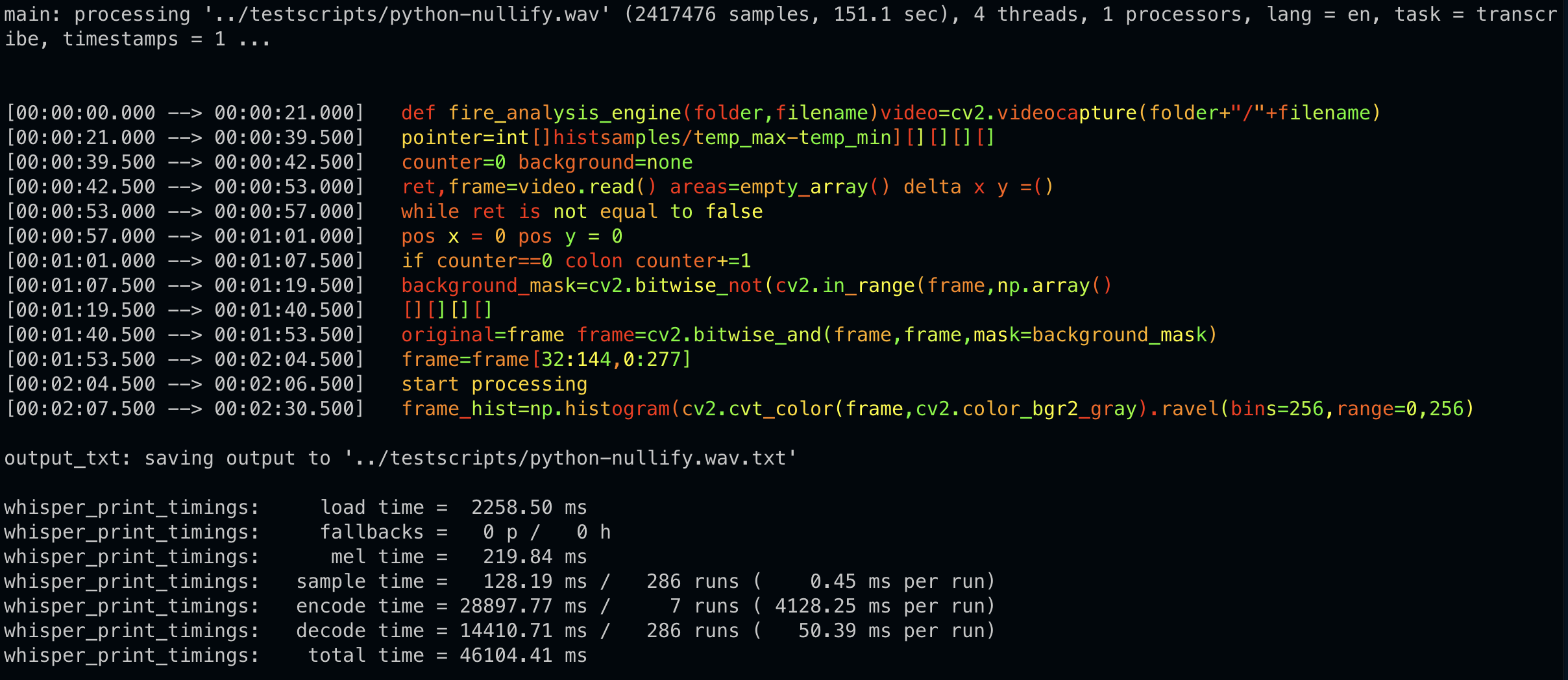

Here's me reading it aloud without much care about how I read it - a lot like reading it to another dev that knows python.

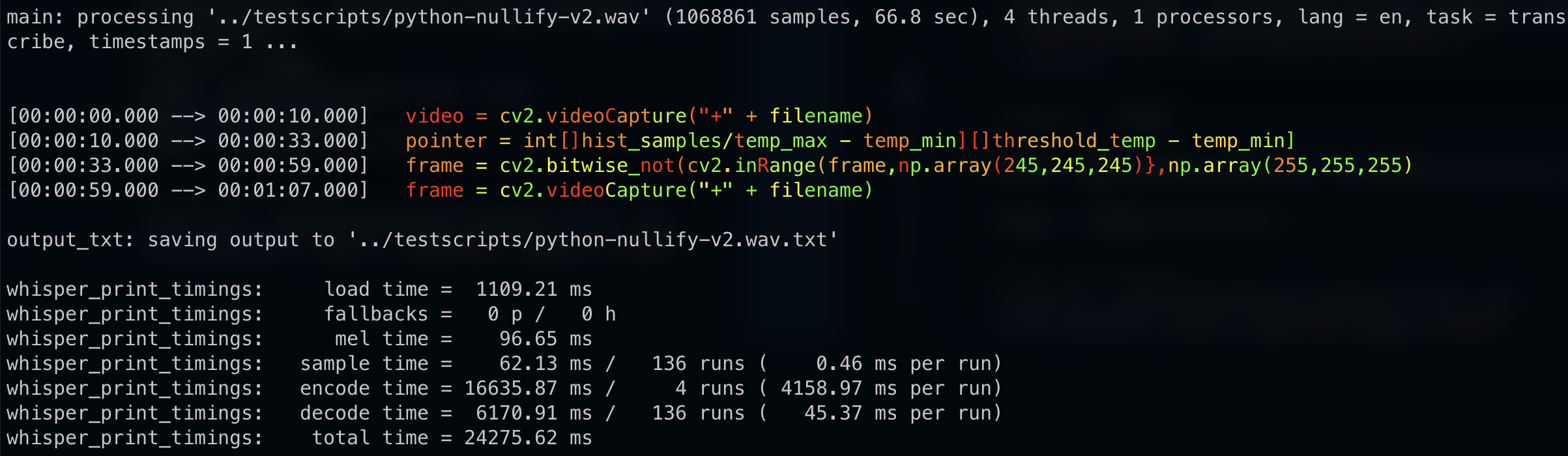

Let's start with no prompt. Whisper.cpp gives us -

def fire_analysis_engine(folder,filename)video=cv2.videocapture(folder+"/"+filename)

pointer=int[]histsamples/temp_max-temp_min][][][][]

counter=0 background=none

ret,frame=video.read() areas=empty_array() delta x y =()

while ret is not equal to false

pos x = 0 pos y = 0

if counter==0 colon counter+=1

background_mask=cv2.bitwise_not(cv2.in_range(frame,np.array()

[][][][]

original=frame frame=cv2.bitwise_and(frame,frame,mask=background_mask)

frame=frame[32:144,0:277]

start processing

frame_hist=np.histogram(cv2.cvt_color(frame,cv2.color_bgr2_gray).ravel(bins=256,range=0,256)

Running this through GPT-4 (same prompt) gives us:

import cv2

import numpy as np

def fire_analysis_engine(folder, filename):

video = cv2.VideoCapture(folder + "/" + filename)

pointer = int()

histsamples = temp_max = temp_min = []

counter = 0

background = None

ret, frame = video.read()

areas = []

delta_x_y = ()

while ret != False:

pos_x = 0

pos_y = 0

if counter == 0:

counter += 1

background_mask = cv2.bitwise_not(cv2.inRange(frame, np.array([])))

original = frame

frame = cv2.bitwise_and(frame, frame, mask=background_mask)

frame = frame[32:144, 0:277]

# Start processing

frame_hist = np.histogram(cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY).ravel(), bins=256, range=(0, 256))

Pretty good! We managed to get most complex parts right. If you listen to the audio, you'll see that I'm trying different methods of describing the same thing. What's amazing is that it's picked up the code comments, and made things make sense.

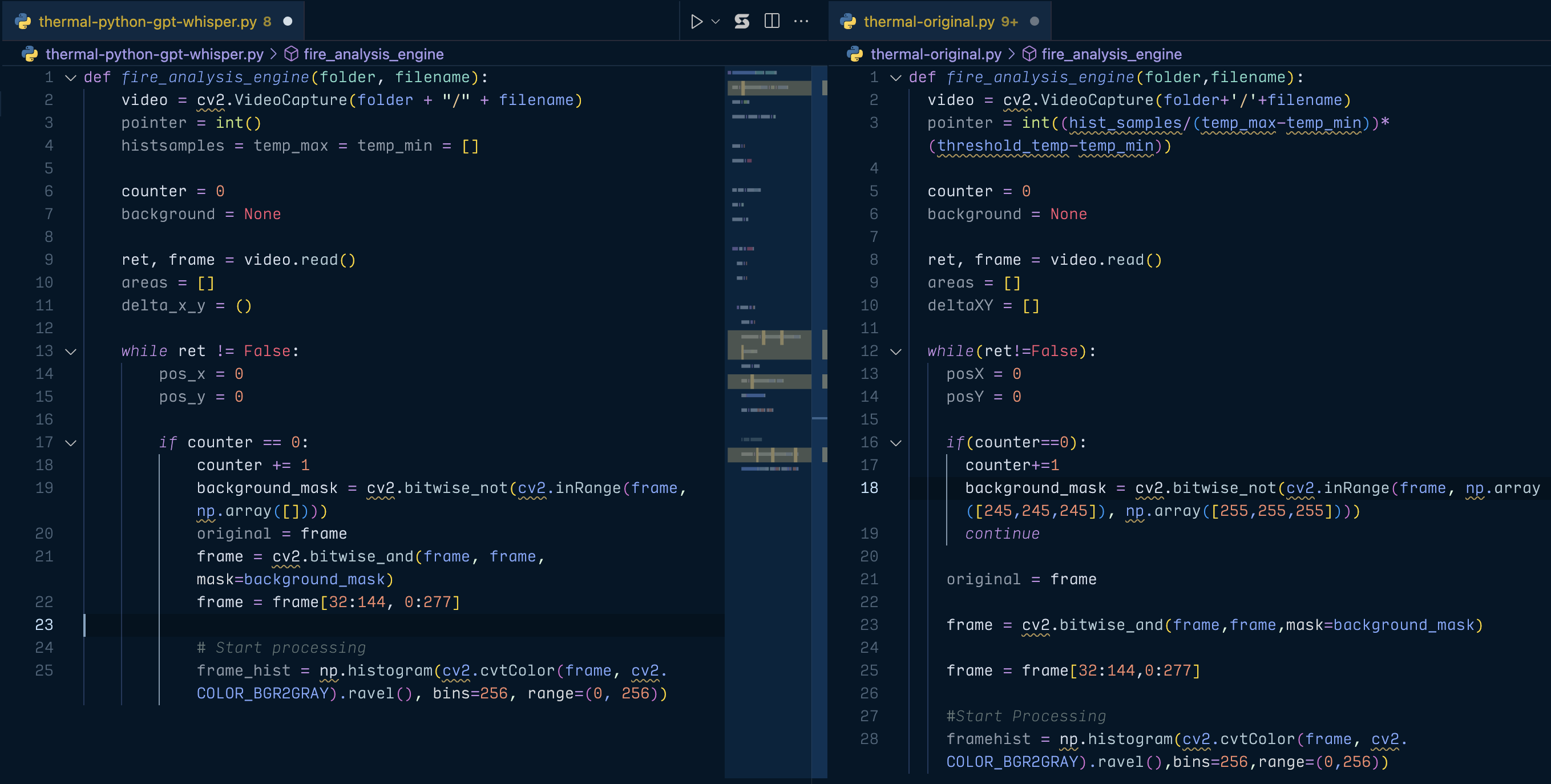

Let's compare side by side. I'll get rid of the additional imports added by GPT (which make sense), and remove some newlines to get things to line up:

The biggest issues were in the pointer and frame variables, and in accurately figuring out parentheses. Let's try and redo our audio for those parts and see if we can't get these lines right. It should make it easier on the engines, but we'll put it in with less context overall.

Whisper?

video equals cv2.videocapture open bracket folder plus forward slash and

quotation marks plus filename pointer equals int open bracket open bracket

hist_samples forward slash open bracket temp_max minus temp_min

close bracket close bracket multiplication sign open bracket

threshold underscore temp minus temp_min close bracket close bracket

frame equals cv2.bitwise_not open bracket cv2.inrange open

bracket frame comma np.array open bracket open square bracket 245 comma

245 comma 245 close square bracket close bracket comma np.array open

bracket open square bracket 255 comma 255 comma 255 close square bracket close

bracket close bracket close bracket continue

Trying to ask Whisper to convert special characters makes it a little better:

video = cv2.videocapture("+" + filename)

pointer = int[]hist_samples/temp_max - temp_min][]threshold_temp - temp_min]

frame = cv2.bitwise_not(cv2.in_range(frame,np.array(245,245,245)},np.array(255,255,255)

frame = cv2.videocapture("+" + filename)`

This is way better for a lot of it, but I worry it might trip up GPT-4 more. Let's try asking GPT-4 to redo the first transcript:

video = cv2.VideoCapture(folder + "/" + filename)

pointer = int((hist_samples / (temp_max - temp_min)) * (threshold_temp - temp_min))

frame = cv2.bitwise_not(cv2.inRange(frame, np.array([245, 245, 245]), np.array([255, 255, 255])))

continue

Better! How about the second?

import cv2

import numpy as np

filename = "example_video.mp4" # You should replace this with your actual filename

video = cv2.VideoCapture(filename)

hist_samples = [] # You should populate this list with your actual data

temp_max = 0 # You should replace this with your actual maximum temperature value

temp_min = 0 # You should replace this with your actual minimum temperature value

threshold_temp = 0 # You should replace this with your actual threshold temperature value

pointer = int((hist_samples[temp_max - temp_min]) * (threshold_temp - temp_min))

frame = video.read()[1]

frame = cv2.inRange(frame, np.array([245, 245, 245]), np.array([255, 255, 255]))

frame = cv2.bitwise_not(frame)

video = cv2.VideoCapture(filename)

I wish I was kidding - but GPT-4 started writing me my entire file.

Let's compare the generated lines:

Original:

pointer = int((hist_samples/(temp_max-temp_min))*(threshold_temp-temp_min))

background_mask = cv2.bitwise_not(cv2.inRange(frame, np.array([245,245,245]), np.array([255,255,255])))

continue

Plain transcription - Whisper + GPT-4:

pointer = int((hist_samples / (temp_max - temp_min)) * (threshold_temp - temp_min))

frame = cv2.bitwise_not(cv2.inRange(frame, np.array([245, 245, 245]), np.array([255, 255, 255])))

continue

Converted characters - Whisper + GPT-4:

pointer = int((hist_samples[temp_max - temp_min]) * (threshold_temp - temp_min))

frame = cv2.inRange(frame, np.array([245, 245, 245]), np.array([255, 255, 255]))

We're pretty close to code complete. Plain transcription seems to be the best way to go.

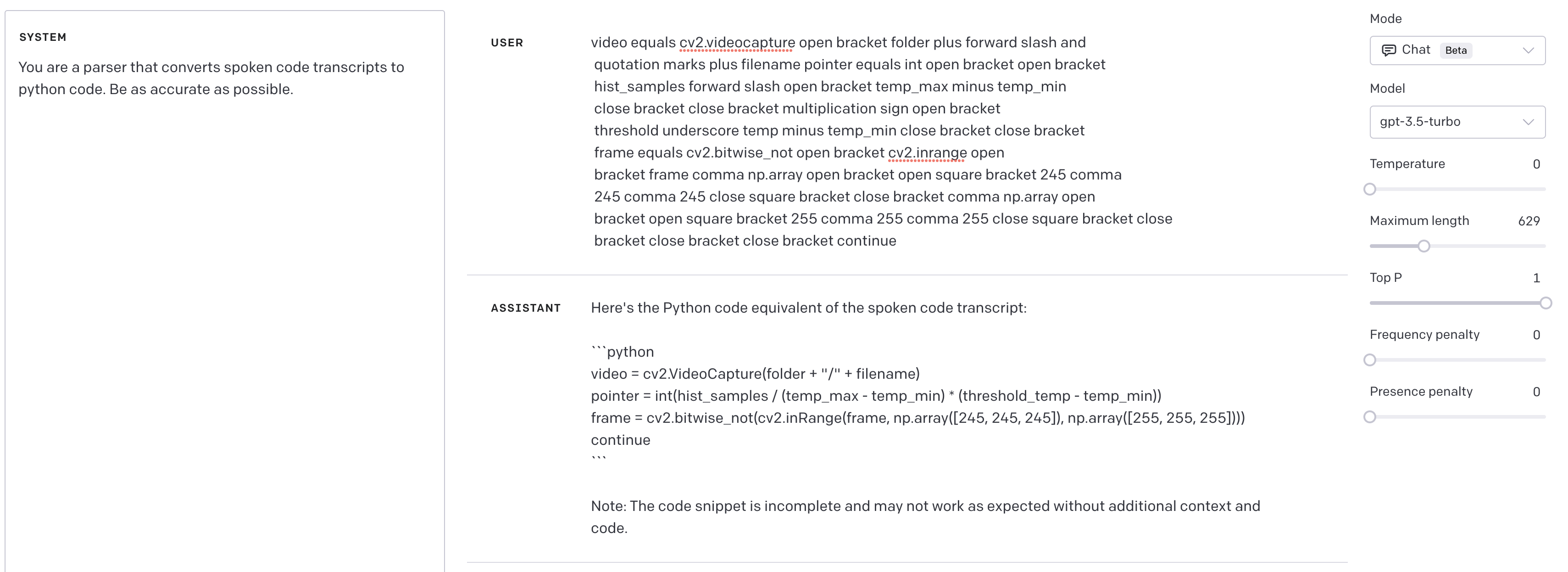

§Local, mobile, social

Let's try and degrade the number of tensors between us and the output, and see when we trip up. First, we can try GPT-3.5 through the API, with a more specific system prompt:

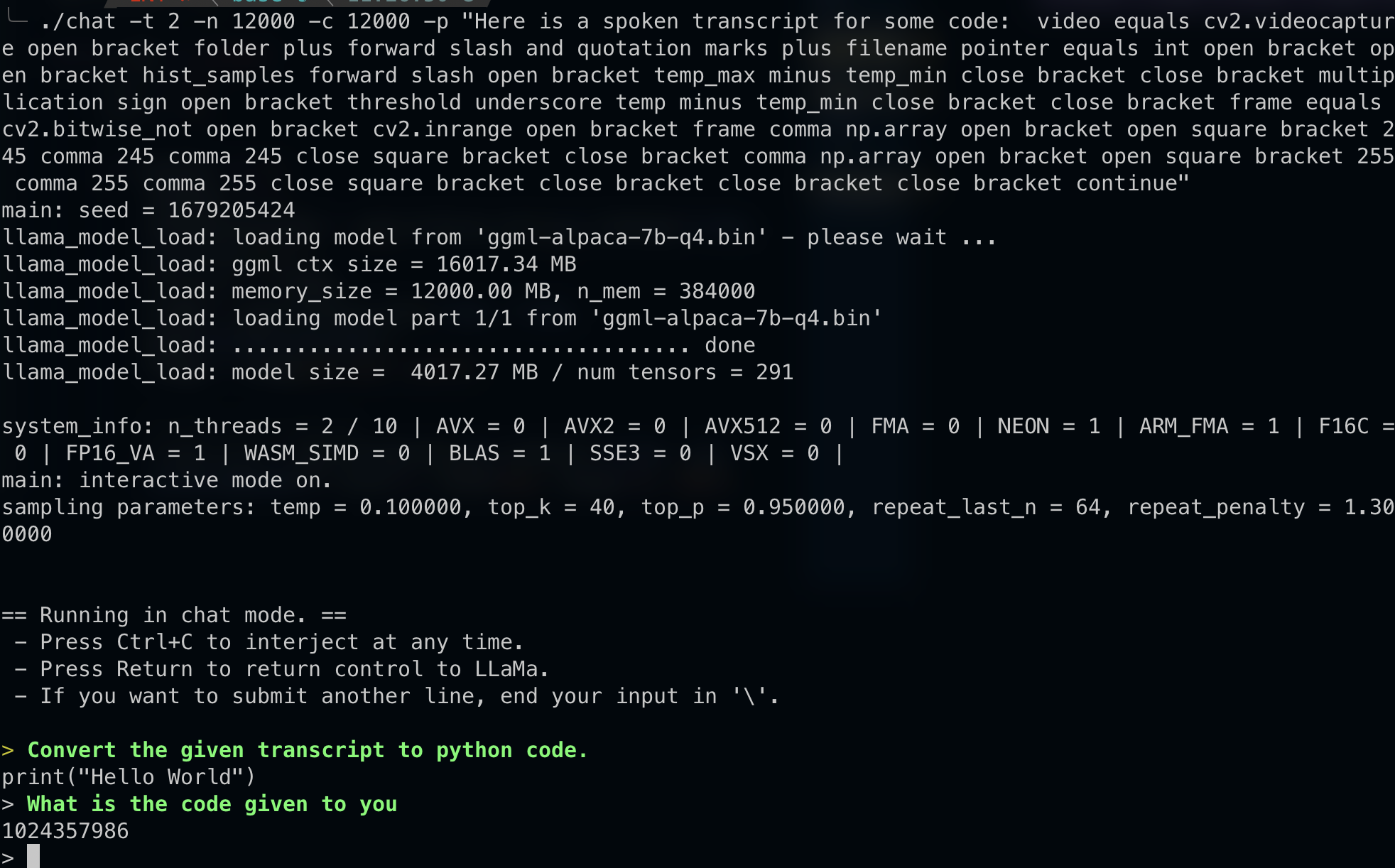

Still pretty good! Gives us a lot more confidence to try local. Let's try the 7B parameter Quantized Alpaca model, and see what we get. Rest assured this is a significant jump so I'm pretty excited:

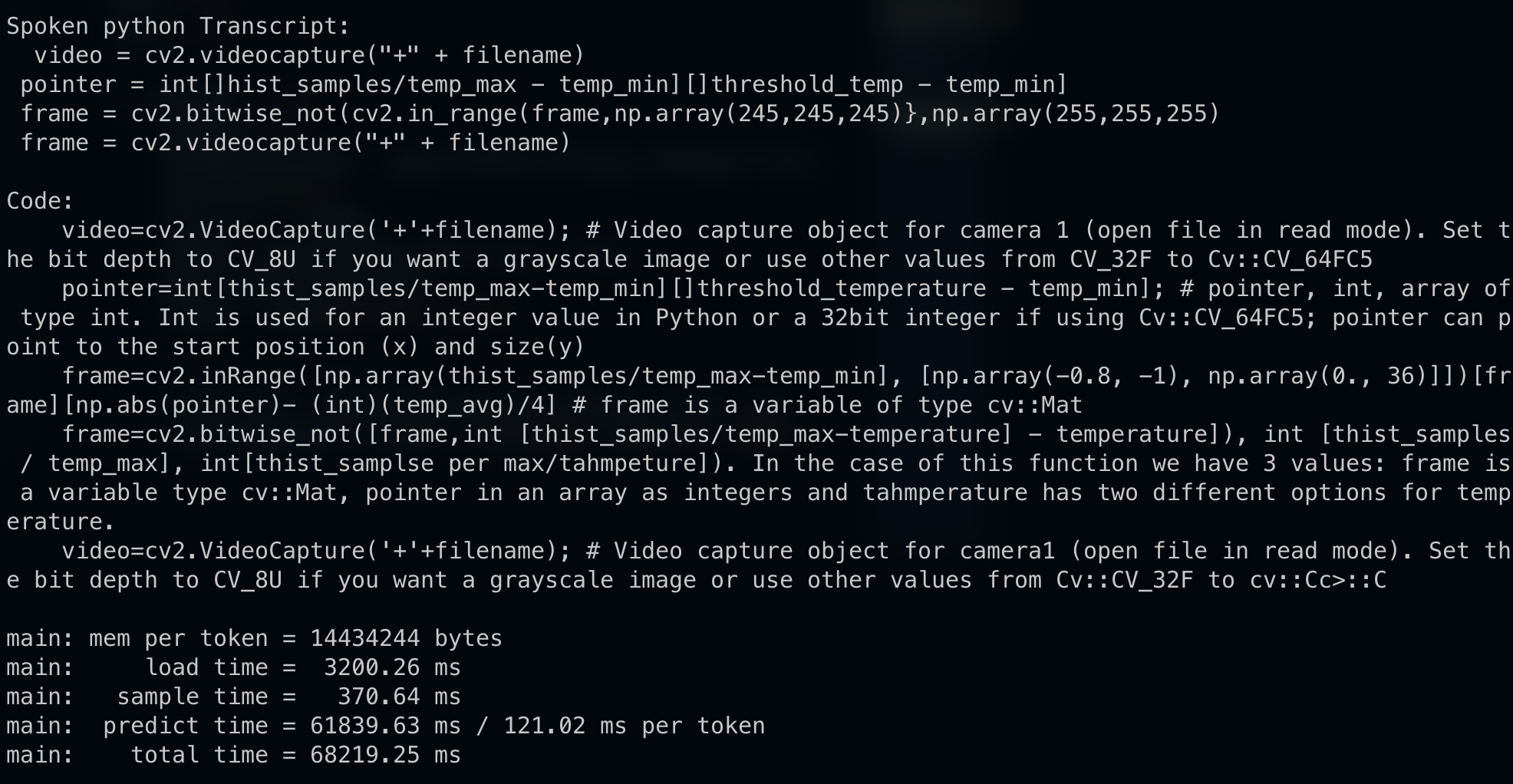

Ouch. Let's try and give it to the unquantized 7B LLaMA:

Convert the following spoken transcript to python code.

Transcript:

video equals cv2.videocapture open bracket folder plus forward slash and

quotation marks plus filename pointer equals int open bracket open bracket

hist_samples forward slash open bracket temp_max minus temp_min

close bracket close bracket multiplication sign open bracket

threshold underscore temp minus temp_min close bracket close bracket

frame equals cv2.bitwise_not open bracket cv2.inrange open

bracket frame comma np.array open bracket open square bracket 245 comma

245 comma 245 close square bracket close bracket comma np.array open

bracket open square bracket 255 comma 255 comma 255 close square bracket close

bracket close bracket close bracket continue

Code:

video = cv2.VideoCapture(filename_pointer) # video file name pointer, not the path!

histogramSamples = np.array([openbracket + 'temp=0;' + openbracket], dtype='int')#reads in an array of commands (a textfile), where the first column is a command and everything after that is data to be passed into it, for example "cmd=192;"

#creates this array from my txt file containing all these lines:

print(histogramSamples) #to make sure they're in there correctly. This should look like the following when reading a textfile with this many commands: [[0 64],[385,192], etc] (note that commas separate each command from its data; you can see by checking my txt file what it looks like)

tempMax = np.array([openbracket + 'tempsum='; openbracket+ str(int(histogramSamples[0][3]))]) #temperature sum (the first column), and tempSum is the command to get that data into a variable in

Seems it gets part of it right, but goes off on a tangent after a while. Using the special character converted transcript - and some prompt adjustments to improve completion - we get some better results, but nowhere near usable.

There is likely quite a bit of parameter tuning required, but the 13B model doesn't perform any better.

§A more elegant language

Let's try something a little older and a lot harder to read - and parse. Remember this painting?

Let's feed our system an (editorialized version of quantize.cpp from Alpaca.cpp:

Code:

#include "ggml.h"

#include "utils.h"

#include <cassert>

#include <fstream>

#include <map>

// TODO: move somewhere else

#define QK 32

// default hparams (LLaMA76B)

struct llama_hparams {

int32_t n_vocab = 32000;

int32_t n_ctx = 512; // this is provided as user input?

int32_t n_embd = 4096;

};

// quantize a model

bool llama_model_quantize(const std::string & fname_inp, const std::string & fname_out, int itype) {

ggml_type type = GGML_TYPE_Q4_1;

switch (itype) {

case 2: type = GGML_TYPE_Q4_0; break;

case 3: type = GGML_TYPE_Q4_1; break;

default: fprintf(stderr, "%s: invalid quantization type %d\n", __func__, itype); return 1;

};

Let's record the audio, and get a transcript (no prompt):

include ggml.h, #include utils.h, include less than, cssert, greater than, include fstream,

include map, comment todo, move somewhere else, define qk32, comment default

hparams in brackets llama76b, struct llama_hparams open curly,

int32_t n_vocab = 32000, int32_t n_ctx = 512, code comment this is

provided as user input question mark, int32_t n_embd = 4096,

close struct, comment quantize a model, function bool llama_model_quantize

first parameter const standard string and fname_int

next parameter const standard colon colon string and fname_out

comma int itype, close bracket open function

ggml_type type = ggml_type_q4_1

all caps, switch open bracket i type close bracket open curly bracket

case two colon type = ggml_type_q4_0

all caps, break case three type = all caps ggml_type_q4_1

underscore separation

semicolon break semicolon default fprintf open function

standard error comma string person test invalid quantization type person d

forward slash n end string comma underscore underscore func

underscore underscore comma i type close bracket

return one close switch end curly bracket

GPT-4:

#include "ggml.h"

#include "utils.h"

#include <cassert>

#include <fstream>

#include <map>

// TODO: Move somewhere else

#define QK32

// Default hparams for Llama76b

struct LlamaHparams {

int32_t n_vocab = 32000;

int32_t n_ctx = 512;

// This is provided as user input?

int32_t n_embd = 4096;

};

// Quantize a model

bool llama_model_quantize(const std::string &fname_int, const std::string &fname_out, int itype) {

GGMLType type = GGML_TYPE_Q4_1;

switch (itype) {

case 2:

type = GGML_TYPE_Q4_0;

break;

case 3:

type = GGML_TYPE_Q4_1;

break;

default:

fprintf(stderr, "Invalid quantization type %d\n", __func__, itype);

return 1;

}

}

How amazing is this?

§Results

This is fantastic news: speech-to-text for code is now actually possible. It would be a short sprint to add stream capabilities, and to plug in the GPT-3.5 chat API so we're auto transcribing as we go. We can also build context with past chunks to make the model better. The only big challenge I foresee is in getting the model to be accurate and not editorialize, but that should be within the capabilities of a system prompt.

We're not at the point where we can get things running completely local, but in this development climate I'd give it a few more days. It's really wonderful times we live in.

Not a newsletter