This is a little more tongue-in-cheek than my usual, but with a serious underlying question. If money, cost and time were no object, what is the best RAG pipeline you can build today?

I'm not just asking for fun. Pipelines today - from what I see having consulted for a good number of companies - suffer from the 'poor man's RAG' problem, where you start with the most basic RAG pipeline, which looks something like this:

- Information comes in (sometimes there's OCR)

- Some kind of chunking happens

- Embeddings are generated

- That's basically it

Often this will yield results just good enough to suggest that a new vector store or a reranker will completely solve all the problems, and you'll have full reasoning over your data.

Yet it always seems one more thing away, customers are never really happy, and while you can point to why your system fails (it's almost always retrieval), there's no real good ways to fix it.

Thus begins the search (I'm not going to link any here) on forums for what the best embedding model is, which vector store is better, and so on. It never ends.

I suspect this is also the reason 'LLMs don't work' posts are still a thing. LLMs are good at approximating answers with bad data. A good chunk of 'LLMs are just overgrown autocorrect' opinions I see - when I've had the misfortune of asking why - boil down to using them badly. You theoretically could use a spreadsheet as just an overgrown calculator, but you probably shouldn't.

Doing it this way is like trying to make a t-shirt by starting with a piece of underwear, asking the question of 'is this a t-shirt yet?' and making adjustments with duct tape.

If you keep going - make the holes bigger to be arm holes, make the body out of tape, tear out a neck - you'll have something that increasingly resembles a shirt, but somehow it still feels uncomfortable.

What if you tried the opposite? Imagine the best system you can, and subtract the things that don't make sense for you.

I'll give you one more reason. We can't necessarily say that the intelligence frontier will continue being pushed, or for how long, but we know that inference will get (at least) 100x cheaper in the short-term. Hardware optimizations, distillation, architectural improvements, economies of scale, model efficiencies are all still visible and only slowly being implemented.

There's a good chance you could do everything in this guide and end up with a dirt-cheap system in two months.

Let's build it - and start at the beginning.

§When in doubt, preprocess

The late-interaction guys had it right - for more reasons than one. Almost any problem you have with your system can be fixed with preprocessing your data.

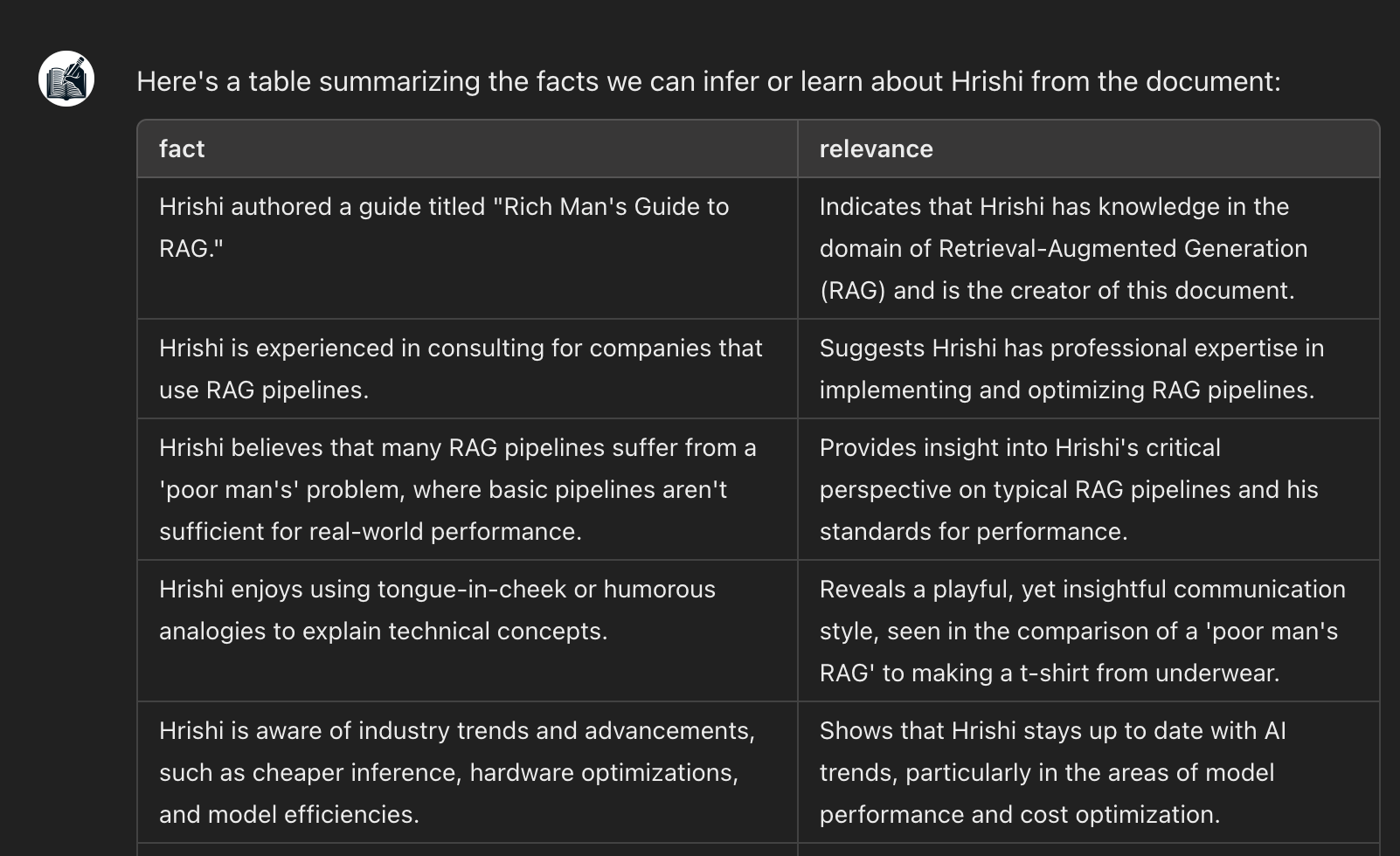

What do I mean? My (now old) guide on Better RAG talks about one example of preprocessing, something I called Fact Extraction at the time. The new Gemma APS model pointed me to the technical name: Abstractive Proposition Segmentation.

Fact Extraction or APS is simple: go through your data with an LLM and ask it to output statements about the world.

As an example, let's run GPT from the old article on the first section of this one:

Any kind of transformation on your input data fits into your preprocessing pipeline. Here are some examples (in increasing complexity):

- Pulling out useful dates and numbers

- Extracting links to external sources

- Extracting tables and storing them separately

- Building and merging a bag-of-words keyword collection

- Generating descriptions of images

- Marking down areas of ambiguity in documents (where things aren't clearly explained)

- Linking documents through the use of pronouns ('this', 'that table', 'figure 7')

This isn't without cost - but we're all rich here. Preprocessing trades off cost and storage for accuracy, with the simple underlying premise that your input data is almost never the right shape to answer your questions (unless you're connecting an FAQ to a chatbot).

§Don't throw your data in the blender

Please. Don't chunk your data.

I won't link it in for the sake of your eyeballs, but watching good data be chunked and embedded is like watching one of those shows where they blend a full meal and drink it.

Sorry - couldn't resist.

Most of the RAG advice I see (from people who should know better) is way worse. It's a lot more like blending meals and handing it to a tiny embedding model that's then asked to recover the original ingredients.

All data has structure - I'm not just talking about tables and graphs. Filenames, dates of creation and modification, titles, subsections, footnotes. Even simple human writing has structure. Paragraphs usually have a topic sentence, elaboration, then a closing line.

Try and preserve this structure. Annotate information as much as possible. Break information where it makes sense to a human. Modern models can easily hold a full document in the context window, usually a lot more. If you can inspect your data to find common formats (or use an LLM to extract typespecs), please do it.

When you preprocess, your aim should be to preserve existing structure, and make implicit structure explicit.

If you like the kitten, consider following me on Twitter. I'm not much for email captures and newsletters, and I usually post shorter things and new articles there.

The funny thing today is that for a lot of datasets, preprocessing it (multiple times!) with a cheap model like Gemini-8b is cheaper than a single question with o1!

§Evals and benchmarks are a process, not a task

In a perfect world, your requirements are so well defined you can start with the test suite. This happens sometimes. Maybe you're trying to augment a team that's answering customer questions, and you have years of emails with questions and answers all well laid out.

If that's you, I'm jealous.

In the world I live in, you usually need to build the system you think users need before you can discover what they'll actually do. If you're lucky you'll get a few hundred questions from users that hint at what they might do with it, but that's the best I've seen.

What this means to you is that building an evaluation suite - questions, good answers, retrieval intermediates - is not something you will ever finish doing. Make it someone's job - ideally everyone's, everywhere, all the time.

Constantly test new models, new pathways, harder tasks. These aren't software test suites. If you ever get to 100% pass, it just means your benchmarks aren't complex enough.

Databricks has some good guides on their own internal benchmarks. What's funny is that the graphs in their articles have become part of my benchmark for multimodal understanding because of how hard to figure out the colors are.

§Trading cost for performance

Did you know you could burn money for performance? This is the equivalent of spitting NOS into your intake, but we're rich here - we can discuss spending more tokens to get that last bit of performance.

If you want to, here are a few ways.

§Consistency

Instead of sending one request to a model, send it ten times at high temperature. Compare the results with another request. If you don't have consensus, the model isn't sure, and it's likely wrong.

§Multi-model validation

Send the same request to different models of the same intelligence tier. You can either trade this off for speed - stream tokens from the fastest responding one - or you can pick the best answer with another request.

§Multi-modal validation

Feed your documents in as a pictures and as text - different requests or the same one. This can sometimes confuse models, but more often than not you'll get better results.

§Task breaking

Take individual prompts and requests and break them down into multiple smaller tasks. e.g. 'Look through this data and compile a table of companies and their revenues' could be 'List all the companies in this data' -> 'List the revenues of this company in this data' n times -> 'Make a table of these companies and revenues'.

The less complex your requests are, the more reliable they'll be. This worked for us a year and a half ago, and it's still true today.

§Use computer

Most LLM pipelines I see today use computers only as model execution environments. It's easy to forget that they're right there - client-side, server-side, these are all computers - and they're really good at things LLMs are bad at. Here's a list of a few things you should ideally use a computer for:

- Arithmetic: LLMs are good at logic but they're not good at math - especially complex math. Integrating a codegen pipeline (which can be as simple and insecure as 'write me the code for X') can help solve this.

- Comprehensive structured search: If you wanted the highest and lowest costs for something from a table, semantic search at 99% accuracy will still be pretty wrong. Keeping structured data and using something like SQL generation will give you more reliable and debuggable results.

- Reproduction: Admittedly they're getting better at this, but LLMs are still pretty bad at reproducing things verbatim (like you and me). If you need to recount things exactly, try either fuzzy matching outputs to the original dataset or getting the LLM to actually query the doc instead of recounting it.

§Agentic Retrieval

If you've done almost all of the things above, you might be in a good place to build an agentic pipeline.

Actually building one is easier said than done. It's best left till the end, when you've got wonderfully stable individual pieces to put together, but here's an example:

- Take the question and transform it a few times to get different variations or simpler components. Run each one through steps 2-8 separately.

- Enrich it with the LLM's internal knowledge (the Astute RAG paper is good place to learn how).

- Use your document and structured index to decide where to look.

- For structured data, generate SQL or code to get reliable output.

- For the document index, look for the best preprocessed intermediate (hypothetical questions? facts? dates?) to see where to start.

- Collect facts and useful information from the results that are relevant to the answer.

- Get references and links (external and internal), follow those (pull data from the web, from your data), etc. and repeat step 3.

- Ask the LLM for additional questions regarding ambiguity in the facts collected. Repeat step 3.

- After n loops, attempt an answer.

- Repeat if you don't have consistency on the answer.

§Ghosts yet to come

I've done my job if you've made it this far, and you're thinking "that's a lot of work - and money/time!"

The intent I have is not to actually build this pipeline - even though it's quickly becoming less of a bad idea. Instead, using the tools here as a template and cutting down to something that best resembles your problem space is a much better way to get to a working system than building up from a roll of tape.

It's often useful to look at patterns from a new perspective. One of the other ways I've been looking at modern AI systems (other than 'what if we were rich') is 'what if our users were impatient'? How would we build the quickest and most responsive system, if we traded off everything else?

Left to the reader :)

Not a newsletter