Building in the AI space has changed how we think about defensibility. The standard question has always been: "Is someone else going to build what I'm building, and how much defense do I have against that competition?" In AI, the question has changed to: "Will I have a company after the next DevDay? Will new models simply eat my use case? What about GPT-7.5?"

Even setting those questions aside, some general feelings cloud our thinking across the board, whether we're personal users of AI, builders and founders, customers, or investors. The rise of LLMs has completely shaken our worldviews of tech. We've watched entire areas of research and development in NLP, vision, and elsewhere, previously open problems, evaporate as one particular type of AI proved able to do them all, setting a new floor for development and invalidating prior work.

Why spend time and money solving a specific problem, if there will soon exist matrices that can be multiplied together to solve it automagically?

Things that previously needed (and were taking) millions in R&D are now just a prompt away. I know this personally. A project I worked on 8 years ago involved multiple pre- and post-processing pipelines and a heavy amount of computer vision (CV) to answer the question "Is there fire here? How much fire is there?" Today, a vision model will likely beat it at the same question if I just ask.

Understandably, skating to where the puck is going is now more uncertain. Builders face the fears I mentioned, and customers and investors face similar ones. Why buy this thing when, two generations from now, AI will just do it on command? Why fund or pay the people building solutions now, when waiting seems like a good idea? What are the right bets in a space that just invalidated so many prior bets?

Even if you are an individual who has nothing to do with AI, not having a position has become dangerous. Modern AI will likely touch and disrupt almost every job and industry that involves language and communication, and that's all of them.

There are no right answers here, but there is one wrong answer: not knowing which hypotheses you're betting your future on, whether as a customer, a founder, or an investor. Knowing them makes you one of the first to find out when you're wrong, and keeps you moving in a cohesive direction.

What's the best way to think about these things? Let's take each perspective. At the end I'll leave you with the specific bets I'm making - any disagreement is welcome.

§The customer perspective

For customers, investing in AI right now has downsides. Most industries are seeing their very first AI use cases get built, and the first one through the wall pays a heavy price: usually a higher development cost in time and money, which is a measure of the uncertainty involved. None of us know what the ideal solutions are, and the cost of walking the Markov chain to discover them isn't small.

However, first adopters also leave the biggest mark on the solution. Their disproportionate control will define the solutions and workflows the entire industry revolves around.

This doesn't even take into account the economic value of some solutions. Many industries will become winner-take-all with the help of AI; we just won't know which ones except in retrospect. Many more will have their unit economics fundamentally changed, as previously high-COGS services businesses become easy to scale and get won by early adopters.

The old quote "You won't lose your job to AI, but you'll lose it to someone using AI" will also hold true for companies - in almost any industry.

§Investing in AI

I think defensibility and moats become a lot clearer if we look at companies and products through their derivatives. This means looking not just at where they are today (position), but also at their velocity and acceleration, and even at how that acceleration is changing.

(The joke is that the third-order derivative is called jerk.)
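To make the derivative framing concrete, here is a minimal Python sketch using finite differences on a made-up monthly metric for two hypothetical companies. The numbers and the `derivatives` helper are illustrative assumptions, not a valuation method we actually use.

```python
def finite_difference(series):
    """First difference: the change between consecutive observations."""
    return [b - a for a, b in zip(series, series[1:])]


def derivatives(series, order=3):
    """Return [position, velocity, acceleration, jerk, ...] up to `order`."""
    result = [list(series)]
    for _ in range(order):
        result.append(finite_difference(result[-1]))
    return result


# Hypothetical monthly metric (e.g. usage) for two made-up companies.
incumbent = [100, 105, 110, 115, 120]
newcomer = [10, 15, 25, 40, 60]

for name, series in [("incumbent", incumbent), ("newcomer", newcomer)]:
    position, velocity, acceleration, jerk = derivatives(series)
    print(name, "now:", position[-1],
          "velocity:", velocity[-1],
          "acceleration:", acceleration[-1])
```

With these made-up numbers it prints `incumbent now: 120 velocity: 5 acceleration: 0` and `newcomer now: 60 velocity: 20 acceleration: 5`. The incumbent is ahead on position, but the newcomer is the one moving and speeding up, which is what this lens is meant to surface.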

To me, this means that every bet in the AI space is a bet on people, since velocity and acceleration come from the team: often specific people, and the views they hold. OpenAI has shown this in several ways, having had the strongest model in a highly contested and heavily invested-in space since the very beginning.

The one thing we can guarantee is that the current solutions in AI will change; almost nothing will look like it does today. This means that the current snapshot of what anyone is building or selling doesn't matter. However, the new versions that become billion-dollar companies are more likely to be built by the same people, who've shown an innate ability to work inside this new paradigm and to stay ahead of the bleeding edge.

This is where it's useful to know, and to state, the specific vision and the specific bets you take as a company. People attract people with the same vision, and people with unique positions even more so. If I were betting on the companies that will win in AI today (and I am), I'd bet on people based on velocity and acceleration, and I'd bet on people who could tell me their base hypotheses.

Seems only fair that I tell you mine.

§Three bets to structure the unknown

Here are the axiomatic bets we're making:

- Constrained, coordinated and guided models will always perform better than their native versions, at any level of intelligence.

- A massive number of proper uses for today's AI are held back by a missing guidance layer, which AI itself will not be able to write for a long time.

- Tokens processed in production and real-world usage are stronger moats than we think.

Let me explain.

§Constrained, coordinated and guided models will always perform better than their native versions, at any level of intelligence.

This is clear to most people working in AI, but it's still a hard position to hold until it's stated this plainly. It has been true of every single AI model released. All of them, and presumably most future models, have the problem we've internally come to call 'not knowing who to be'. They're trained on so many tokens and contexts that, despite their general intelligence, they're poor at specific tasks unless controlled very closely. Managing the instructions and the context (which includes trimming, adjustments, task complexity, and coordination, things I've written about before) has become the new open problem in front of us.
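As one concrete example of the context management involved, here is a minimal sketch of a single trimming policy: pin the task instructions, then keep as much recent history as fits a budget. It assumes a whitespace word count as a stand-in for a real tokenizer, and the `Message` type and `build_prompt` function are hypothetical, not our production code.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # e.g. "system", "user", "assistant"
    text: str


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one "token" per whitespace-separated word.
    return len(text.split())


def build_prompt(instructions: str, history: list[Message], budget: int) -> list[Message]:
    """Pin the task instructions, then keep as much recent history as fits the budget."""
    remaining = budget - approx_tokens(instructions)
    if remaining < 0:
        raise ValueError("instructions alone exceed the budget")
    kept = []
    # Walk from newest to oldest so recent context wins when something must be trimmed.
    for msg in reversed(history):
        cost = approx_tokens(msg.text)
        if cost > remaining:
            break  # stop at the first message that doesn't fit, keeping history contiguous
        kept.append(msg)
        remaining -= cost
    # Instructions first, then the kept history back in chronological order.
    return [Message("system", instructions)] + list(reversed(kept))


history = [
    Message("user", "one two three"),
    Message("assistant", "four five"),
    Message("user", "six"),
]
prompt = build_prompt("You are a SQL assistant.", history, budget=8)
print([m.text for m in prompt])
```

This prints `['You are a SQL assistant.', 'four five', 'six']`: the oldest message is dropped because it no longer fits. Stopping at the first message that doesn't fit, rather than skipping it, keeps the retained history contiguous; a real guidance layer would likely add more on top, such as summarization or relevance scoring.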

Almost all good AI products you see and use are a new way of solving that problem. Copilot has figured out how to represent code complexity in a way that makes autocomplete work, V0 has found a way to structure UI problems with enough context that models can actually be useful there, and so on.

Talk to any company that has taken an AI service to production and you'll hear about this coordination: the things they've had to learn, and how some solutions just seem better or can do things that seemed impossible. Clearly, a model's underlying intelligence is not enough on its own.

This is the core bet behind what we're doing: native intelligence in models will never surpass well-coordinated AI systems that use those same models. This means that core advancements in AI are a tailwind. It puts us back in the same competitive space as before, where you only worry about someone else who can do what you're doing, but better, and that is a state of being I'm very comfortable with as a founder.

§A massive number of proper uses for today's AI are held back by a missing guidance layer, which AI itself will not be able to write for a long time.

This has more implications than you might initially realize. A good number of people will agree with me on the first part: the ETL pipelines, vision systems, agent patterns and other things that LLMs need to be truly useful don't exist yet. They're being written and rewritten to keep up with changes in technology and customer demands, but they're not as good as they can be.

So when I say we believe a massive amount of performance and intelligence remains to be unlocked with that guidance layer, it likely won't be a controversial statement.

However, the sparks of AGI we saw with GPT-4 have led many of us to believe that this guidance layer will be written by AI. The belief is that if we could state the task and provide a Docker container to write and execute code in, GPT-5 would likely be able to do all of this, with no human intervention required.

I think we're a long way from this, for two reasons. First, correct code is very far from error-free code: I know this because I write a lot of code that is error-free but not correct. Second, much of the distance between error-free and correct is bridged with human knowledge, intuition, and judgment calls. AI that can write code as complex as we know this layer demands will be pretty close to ASI, which means we'll have new problems. Until then, relying entirely on model-generated code is a good way to trade away security guarantees for not much benefit. I think this is a red herring a lot of us have chased, or will continue to chase.
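A deliberately trivial illustration of the gap between error-free and correct (a toy example of our own, unrelated to any real system): both functions below run without raising, but only the second actually returns a median.

```python
def median_buggy(values):
    # Runs without error, but forgets to sort: it returns the middle element
    # of the *input order*, not the median.
    mid = len(values) // 2
    return values[mid]


def median(values):
    # Correct version: sort first, then handle odd and even lengths.
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2
```

`median_buggy([3, 1, 2])` happily returns `1`, while the median is `2`. Nothing crashes and nothing warns; catching it takes someone who knows what the result is supposed to be.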

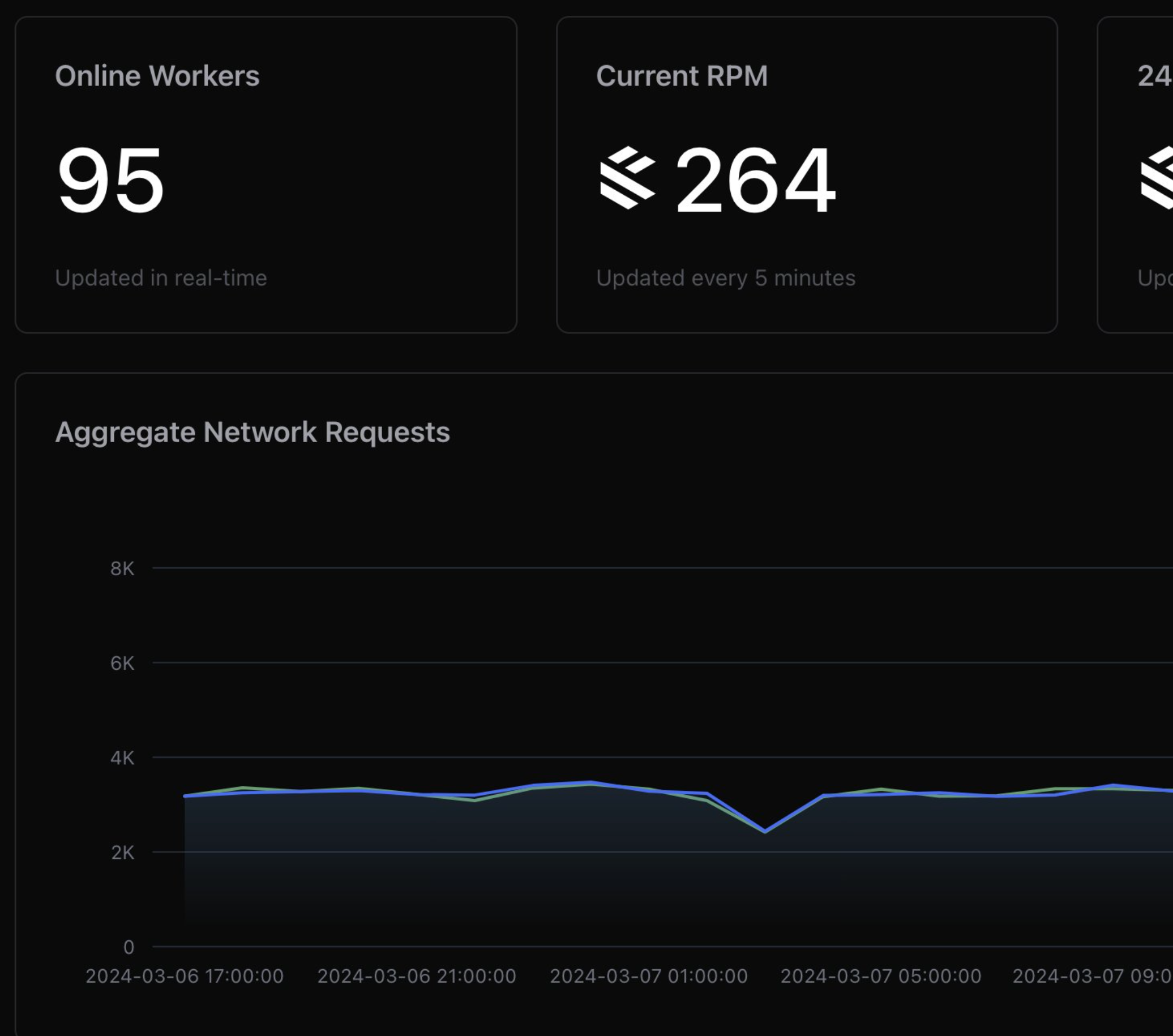

§Tokens processed in production and real-world usage are stronger moats than we think.

This, I think, has been one of our biggest strengths. As we built VisionRAG and automated ETL pipelines, we were surprised by the lack of competition, and it began a journey of talking to other companies, reading more research, and finding out why. Chesterton's fence applies to everything we do, and we wanted to understand why we seemed to be further ahead.

The strongest reason we can find has been our proximity to real-world problems and production since day 1. The oldest systems we put in place, WishfulSearch and VisionRAG, are some of our most stable, because of the paths they've taken while solving real problems for real professionals. We firmly believe that this is a moat, and that it gets stronger over time.

Being close to real problems has a way of narrowing your focus and giving you specific end goals. Tools, and the growing body of general AI research, become far easier to navigate. We've tested almost a hundred foundation models, and as we build for more and more customers, evaluating them gets easier. Benchmarks barely matter when we can tell you exactly how good Goliath-120b is at generating SQL for 20 tables and how that degrades, or how well Mixtral can follow reverse-retrieval tasks.
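A sketch of what a production-derived evaluation can look like, as opposed to a public benchmark: score text-to-SQL outputs by execution match (the prediction returns the same rows as a reference query) and bucket the pass rate by schema size to see how accuracy degrades. Everything here is hypothetical: the toy `orders` table, the test cases, and `fake_model`, which stands in for a real model call and is hard-coded to fail on large schemas purely to exercise the harness.

```python
import sqlite3
from collections import Counter, defaultdict
from typing import Callable


def execution_match(conn: sqlite3.Connection, predicted_sql: str, expected_sql: str) -> bool:
    """A prediction counts as correct if it returns the same rows as the reference query."""
    try:
        predicted = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # SQL that fails to execute is a miss, not a crash
    expected = conn.execute(expected_sql).fetchall()
    # Compare as multisets so row order does not matter.
    return Counter(predicted) == Counter(expected)


def pass_rate_by_schema_size(cases: list, generate_sql: Callable[[str, int], str],
                             conn: sqlite3.Connection) -> dict:
    """Bucket pass rates by how many tables the model was shown."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for case in cases:
        n = case["n_tables"]
        totals[n] += 1
        sql = generate_sql(case["question"], n)
        if execution_match(conn, sql, case["expected_sql"]):
            passes[n] += 1
    return {n: passes[n] / totals[n] for n in sorted(totals)}


# Toy database and cases, purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.0)])

cases = [
    {"n_tables": 1, "question": "What is total revenue?",
     "expected_sql": "SELECT SUM(total) FROM orders"},
    {"n_tables": 20, "question": "What is total revenue?",
     "expected_sql": "SELECT SUM(total) FROM orders"},
]


def fake_model(question: str, n_tables: int) -> str:
    # Hypothetical stand-in for a real model call: it "degrades" on large
    # schemas by hallucinating a column name.
    if n_tables < 10:
        return "SELECT SUM(total) FROM orders"
    return "SELECT SUM(totl) FROM orders"


result = pass_rate_by_schema_size(cases, fake_model, conn)
print(result)
```

For this toy setup it prints `{1: 1.0, 20: 0.0}`. With real cases drawn from customer queries, the same bucketing is what lets you say how a given model holds up as schemas grow.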

This means we spend less time meandering and more time perfecting use cases - and UI/UX. If I look at what we do today, can the central concept be copied? Yes - with enough time.

But when I consider the band-aids, rebuilds, and optimizations we've layered on, I can't help but think that anyone going down the same path will have to rediscover them - and take just as long to do it.

When I also look at the internal infrastructure we've had to build to serve these systems natively on the web with low latency, the drawbridge needed to cross that moat gets even longer.

§The true unknown

All of that said, there is still the bogeyman of what comes next in AI. The true unknown unknowns here are emergent behaviors, the same kind of thing that changed the world with GPT-3.5. It's impossible to predict the future from past patterns in the face of emergent, unknown behaviors, and that will always be true, whether it's complex reasoning in AI or aliens. But I might have an interesting take here.

What if I told you that since GPT-3.5, we haven't had any more new emergent behaviors? I've had this feeling for a while, and I've been trying to validate this with the people I know - and so far it's gone unchallenged.

Don't get me wrong - we've made massive strides since ChatGPT initially launched - we've improved on the things we found with GPT-4, and we've figured out smaller and faster ways to get the same things. But I can't shake the feeling that we haven't seen new emergent behaviors since the original. I would be happy to be corrected on this, since it would mean a brighter and faster path to proper AGI. It wouldn't change our original hypotheses - it's still hard to guard against true unknowns.

What that means is that we can start making bets about the immediate future of AI based on what we've seen so far. We can expect models to get faster, better, and cheaper at the things we've already seen: tool usage, planning, instruction following, labeling, and everything else so far.

One wildcard is vision models - which are now at the point gpt-3.5-turbo was at launch. We're only beginning to scratch the surface. There is going to be a gold rush to find the new patterns (like RAG, agents, and so on) inside vision models - which are proving to be a completely different beast to LLMs. The number of companies with production experience in this space is tiny, and it's our longest-term bet.

§So what do we do?

The only wrong move here is not knowing what implicit bets you're making - with the way we live our lives, build our companies, and invest in the future. It's okay to be wrong - I often am - but it's hard to know when you're wrong if you don't know what you think. It's been eye-opening to understand how people think in this space. We all have different information sets that lead to different conclusions - and I'd love to hear yours!

§Counterpoints

Ben@TheGP offered a strong counter to the first bet, where I said that "native intelligence in models will never surpass well-coordinated AI systems that use those same models": "this might very well be true, but it doesn't mean that we'll opt for well-coordinated AI systems."

He's right: solutions win and lose for far more reasons than performance and quality. VHS won over Betamax, and QWERTY won over Dvorak. Being first to market, price, even the shade of your gradients in a consumer-facing product all matter.

This is where I think building for the web makes a difference. Today it has the largest audience, the lowest switching costs (you already have the runtime installed), and the most polish when it comes to UI/UX. On top of that, WebGPU is still the best way to run cross-platform models natively.

He's still right - but to me it means that we're back in the conventional world of competition, where you have to win on marketing, execution, scale and a thousand other things. I think a lot of people - including me - would be comfortable with that.
